Build smarter and more reliable AI applications using RAG

Enhance your LLMs with relevant, purposefully selected knowledge

RAG (Retrieval Augmented Generation) combines information retrieval with advanced text generation to enhance AI responses. When a user asks a question, the system first searches through a curated knowledge base to find relevant information and context. The retrieved information is then seamlessly integrated with a large language model’s processing capabilities to construct responses that incorporate both stored knowledge and generated text.

Benefits of using RAG

Minimize AI hallucinations and improve factual accuracy in application responses.

Reduce costs compared to fine-tuning by requiring less computational resources and training time.

Provide fresh context data ensuring that generated output includes up-to-date information.

How to create RAG applications with Weave

Iterate for continuous improvement

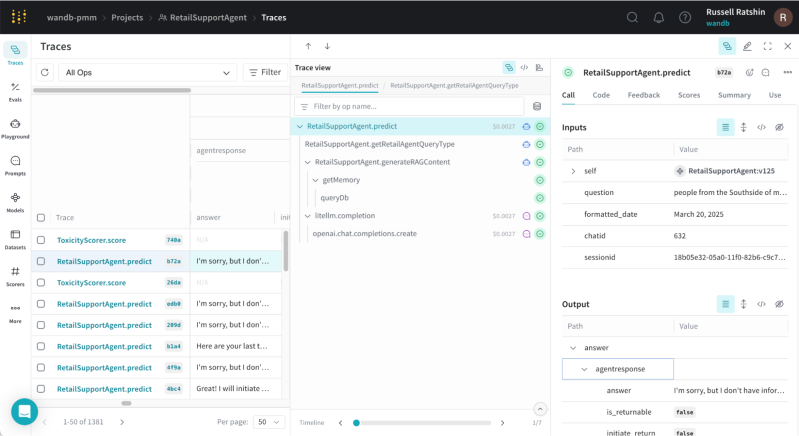

W&B Traces and W&B Evaluations allow you to record LLM inputs, outputs, metadata, and code facilitating comprehensive analysis, iteration, and optimization of your AI application.

Track everything

Weave Models combine data and code, providing a structured way to version your application so you can more systematically keep track of your experiments.

Support safety and compliance

W&B Guardrails act as real-time safety checks on LLM input and output protecting both users and your AI application from harm.

Trusted by the leading teams across industries—from financial institutions to eCommerce giants

Socure, a graph-defined identity verification platform, uses Weights & Biases to streamline its machine learning initiatives, keeping everyone’s wallets a little more secure.

Qualtrics, a leading experience management company, uses machine learning and Weights & Biases to improve sentiment detection models that identify gaps in their customers’ business and areas for growth.

Invitae, one of the fastest-growing genetic testing companies in the world, use Weights & Biases for medical record comprehension leading to a better understanding of disease trajectories and predictive risk

The Weights & Biases end-to-end AI developer platform

Weave

Models

The Weights & Biases platform helps you streamline your workflow from end to end

Models

Experiments

Track and visualize your ML experiments

Sweeps

Optimize your hyperparameters

Registry

Publish and share your ML models and datasets

Automations

Trigger workflows automatically

Weave

Traces

Explore and

debug LLMs

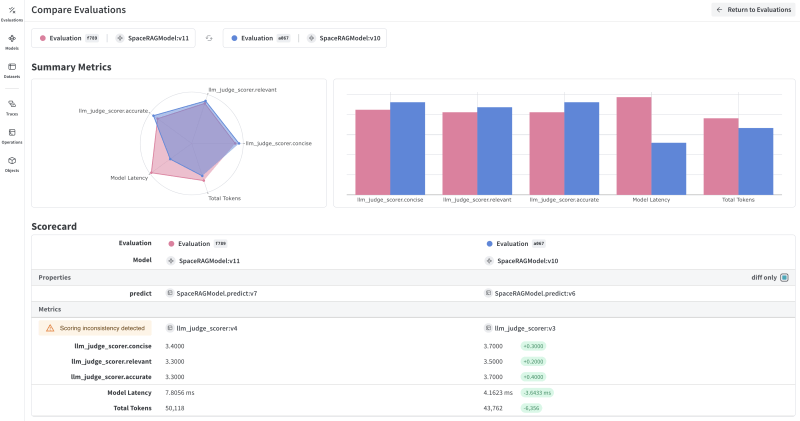

Evaluations

Rigorous evaluations of GenAI applications