How SambaNova is pioneering LLM evaluations with advanced hardware and Weights & Biases

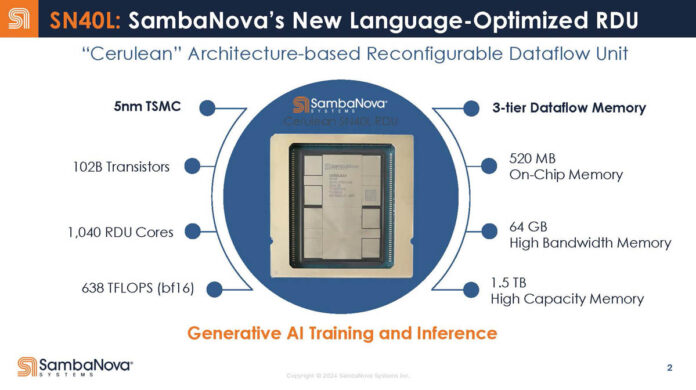

SambaNova Systems has emerged as a leader in the AI infrastructure space with its proprietary Reconfigurable Dataflow Units (RDUs), specialized chips purpose-built for generative and agentic AI workloads. Most recently, its chips delivered 198 tokens per second on DeepSeek-R1 671B from a single rack. SambaNova reports this as 3x the speed and 5x the efficiency of the latest GPUs, and as the fastest inference available for DeepSeek.

What sets SambaNova’s technology apart is its three-tier memory architecture. It combines on-chip SRAM with high-bandwidth memory (HBM) and high-capacity DDR memory attached to the RDU. This dataflow design keeps hundreds of models in memory and, according to SambaNova, switches between them 100x faster than GPUs. That makes it possible to run agentic AI on a single, efficient node.

“Being able to switch models in and out of the chip architecture is a big deal – that’s what allows our users to scale very well,” said SambaNova architect Kwasi Ankomah. “You can have 10 models but you only need the same amount of hardware because we just pull one in and out of DDR and put it onto the chip. That’s our hardware story, we have very good hardware that really differentiates running large models at scale with a smaller footprint as well.”

Beyond its hardware, SambaNova offers SambaStudio, which unifies the DataScale® system and the Composition of Experts model architecture into one end-to-end AI platform built for scalability and simplicity. Clients can deploy and fine-tune models and manage access and resources, all from a single app.

To optimize their model training workflows and gain better visibility into performance, the SambaNova team has been using Weights & Biases Models. As they expanded their focus to LLMs, they needed tracking and metrics built for LLMOps, such as traces of LLM calls, token usage, and latency.

“W&B Models has been great for telling us how a model is training, but what we didn’t have visibility of was this kind of LLMOps bit, right?” said Ankomah. “Given the integration and our great experience with the Models product already, that’s why we were interested in W&B Weave, to give us a unified way of viewing not only our models workloads but our LLM inference workloads as well.”

Measuring LLM performance at scale

SambaNova initially adopted W&B Models to support its core ML workflow. Its ML engineers relied on Models to get immediate insights into model performance, streamline experimentation and hyperparameter tuning, and validate model performance before releasing models into SambaStudio. Now that Models is integrated into SambaStudio, SambaStudio users also get access to core W&B logging metrics.

“We want to make sure the data stays private for our end users,” said Ankomah. “W&B was very appealing because you have a lot of metrics out of the box and it’s also very easy to customize metrics and workflows as well, without worrying about data privacy or security.”

As SambaNova’s focus expanded to include LLM inference workloads, the team faced new LLMOps-specific challenges. Ankomah and his team build bespoke AI solutions for clients by integrating SambaNova hardware with well-known frameworks and systems like W&B. They wanted to help their customers address these challenges, and Weave answered many of their questions.

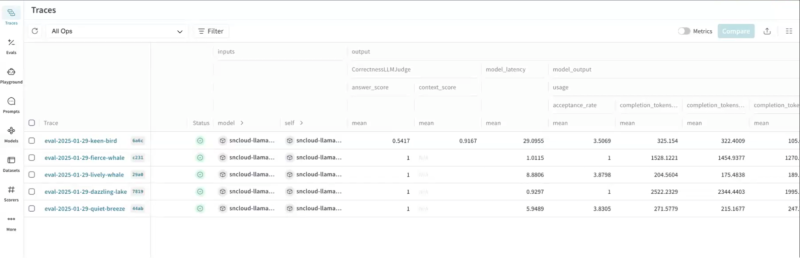

“The big thing we were trying to solve for our clients was giving them answers to questions like, ‘What’s the quality? What’s the usage? What’s the latency?’” said Ankomah. “We want to see the traces, which LLMs are being used, how many tokens are being generated. The speed, the inference benchmarks, that’s all really important for us and our clients, and Weave has been great for answering all of that.”
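Ankomah’s questions (which model, how many tokens, what latency) are what a trace records for each LLM call. Weave collects traces for functions decorated with `@weave.op`. The sketch below imitates that idea with a plain-Python decorator so it runs without any service or API key. The names `TRACES`, `traced`, and `fake_llm` are hypothetical, and the whitespace-based token count is a crude stand-in for a real tokenizer.

```python
import functools
import time
from typing import Any, Callable, Dict, List

# In-memory stand-in for a tracing backend (hypothetical).
TRACES: List[Dict[str, Any]] = []

def traced(model_name: str) -> Callable:
    """Decorator that records op name, model, latency, and rough token counts per call."""
    def decorator(fn: Callable[[str], str]) -> Callable[[str], str]:
        @functools.wraps(fn)
        def wrapper(prompt: str) -> str:
            start = time.perf_counter()
            output = fn(prompt)
            latency = time.perf_counter() - start
            TRACES.append({
                "op": fn.__name__,
                "model": model_name,
                "latency_s": latency,
                # Whitespace split is only a rough proxy for real tokenization.
                "input_tokens": len(prompt.split()),
                "output_tokens": len(output.split()),
            })
            return output
        return wrapper
    return decorator

# Hypothetical model call; a real one would hit an inference endpoint.
@traced("hypothetical-model")
def fake_llm(prompt: str) -> str:
    return "RDUs are dataflow chips"
```

Calling `fake_llm("What is an RDU?")` appends one record to `TRACES` with the op name, model, latency, and rough token counts. A real tracing backend would also store inputs and outputs and nest child calls. This sketch omits both.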

“When we build products around SambaNova hardware, we really care about how good these applications or LLMs or Models are, so Weave is a perfect fit for this,” said Luis Salazar, an ML Engineer on Ankomah’s team. “All the classes from Weave, the models, the assets, the evaluations, all run smoothly with our models. We also take advantage of the fast inference from SambaNova and the asynchronous property of the Weave code, so we can evaluate not only one particular solution but multiple solutions at once.”
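Salazar describes evaluating several solutions at once. That benefit comes from running independent, I/O-bound model calls concurrently, and Weave evaluations are run as `asyncio` coroutines. The sketch below shows the pattern with plain `asyncio.gather` rather than Weave’s API. `evaluate_all`, `fake_model_a`, and `fake_model_b` are hypothetical, and the sleeps stand in for network latency to an inference endpoint.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List, Tuple

# A "solution" is any async callable mapping a question to an answer.
Solution = Callable[[str], Awaitable[str]]

async def run_solution(name: str, solve: Solution, questions: List[str]) -> Tuple[str, List[str]]:
    # Send every question to this solution concurrently; gather keeps input order.
    answers = await asyncio.gather(*(solve(q) for q in questions))
    return name, list(answers)

async def evaluate_all(solutions: Dict[str, Solution], questions: List[str]) -> Dict[str, List[str]]:
    # Run all solutions concurrently too, then collect answers by solution name.
    results = await asyncio.gather(
        *(run_solution(name, solve, questions) for name, solve in solutions.items())
    )
    return dict(results)

# Hypothetical stand-ins for remote LLM calls.
async def fake_model_a(question: str) -> str:
    await asyncio.sleep(0.01)  # simulated network latency
    return f"A: {question}"

async def fake_model_b(question: str) -> str:
    await asyncio.sleep(0.01)  # simulated network latency
    return f"B: {question}"

if __name__ == "__main__":
    answers = asyncio.run(
        evaluate_all({"model_a": fake_model_a, "model_b": fake_model_b}, ["q1", "q2"])
    )
    print(answers)
```

The example makes four simulated calls of about 10 ms each. Because the waits overlap, the whole run takes roughly as long as one call rather than four.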

Weave’s custom scoring framework allowed Salazar and his team to iterate on their own reusable LLM-as-a-judge evaluation system. They set up a custom LLM in the judge role. Guided by prompts the team writes, it evaluates answers from an LLM or a RAG (retrieval-augmented generation) pipeline.

“We gave the answers from the LLM and also the ground truth answer, and Weave gives us a metric, how good the answer is, and also the embeddings as well as the context from that particular question,” said Salazar. “I think this is a really good feature that Weave has, and we took advantage of it by creating a custom LLM to evaluate our solutions. Weave was also much more scalable and user-friendly in the way that it was written, we could just pipe it into things we wanted to do. Being able to use Weave in different parts of this ecosystem was very appealing to us.”
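An LLM-as-a-judge scorer of the kind Salazar describes works in three steps:

1. It takes the question, the model’s answer, and the ground-truth answer.
2. It asks a judge model for a verdict.
3. It returns a score with a reason.

The sketch below is framework-agnostic Python, not Weave’s API or SambaNova’s implementation. The prompt wording, the 1 to 5 scale, the JSON reply format, and the `judge` callable are all assumptions. In practice `judge` would wrap a call to the judge LLM, and a function like this could be passed as a scorer to a Weave evaluation.

```python
import json
import re
from typing import Callable, Dict, Union

# Hypothetical judge prompt; the team's actual prompts are not shown in the source.
JUDGE_PROMPT = """You are grading an answer against a reference answer.
Question: {question}
Reference answer: {expected}
Candidate answer: {answer}
Reply with JSON only: {{"score": <integer 1-5>, "reason": "<one sentence>"}}"""

def llm_judge_score(
    question: str,
    answer: str,
    expected: str,
    judge: Callable[[str], str],
) -> Dict[str, Union[int, str, None]]:
    """Ask a judge LLM to grade `answer` against `expected`; return score and reason."""
    prompt = JUDGE_PROMPT.format(question=question, expected=expected, answer=answer)
    reply = judge(prompt)
    # Judges sometimes wrap JSON in extra text, so grab the outermost {...} span.
    match = re.search(r"\{.*\}", reply, re.DOTALL)
    if match is None:
        return {"score": None, "reason": f"unparseable judge reply: {reply!r}"}
    try:
        verdict = json.loads(match.group(0))
        score = int(verdict["score"])
    except (json.JSONDecodeError, KeyError, TypeError, ValueError):
        return {"score": None, "reason": f"unparseable judge reply: {reply!r}"}
    # Clamp to the 1-5 scale the prompt asks for.
    score = max(1, min(5, score))
    return {"score": score, "reason": str(verdict.get("reason", ""))}
```

Returning `None` for an unparseable reply, instead of raising, lets one malformed verdict be counted separately without aborting a whole evaluation run.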

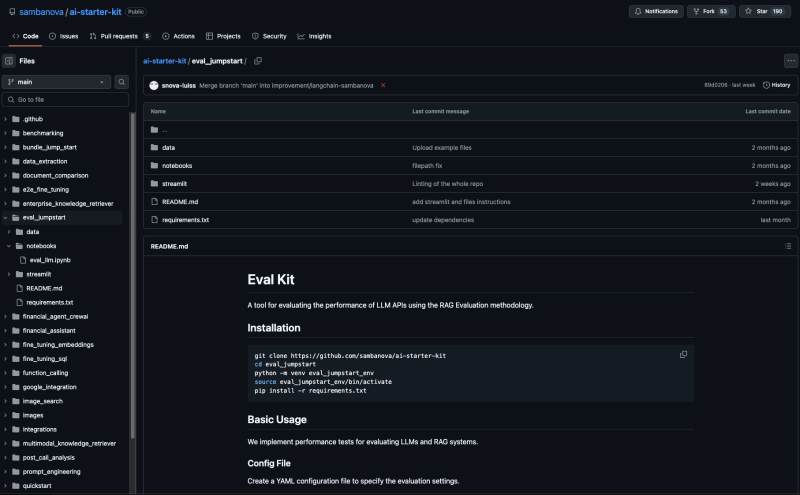

SambaNova Evaluation Kit, powered by Weave

As part of building bespoke solutions for clients, Salazar frequently creates starter kits around specific functionality. When the team began receiving more requests for a solution with evaluation capabilities, Weave proved invaluable.

“We wanted to have a way to evaluate a lot of LLMs, and just to essentially get loads of data about certain APIs and usage metrics,” said Ankomah. “So Luis built this SambaNova Evaluation Kit, using Weave as the key framework powering this, and you get all the powerful insights from Weave – the score, the reason, things like that. We want to make it as easy as possible for a customer to evaluate their answers and be confident they have good answers.”

The open-source SambaNova Evaluation Jumpstart Kit lets users benchmark multiple LLMs for speed, cost, and accuracy, with real-time insights and metrics logged to Weights & Biases. It is built on SambaNova Cloud, a fast inference environment for agentic workloads where enterprises can use the latest open-source foundation LLMs. It is powered by W&B Weave and supports configurable datasets, vector databases, embedding models, and LLMs.

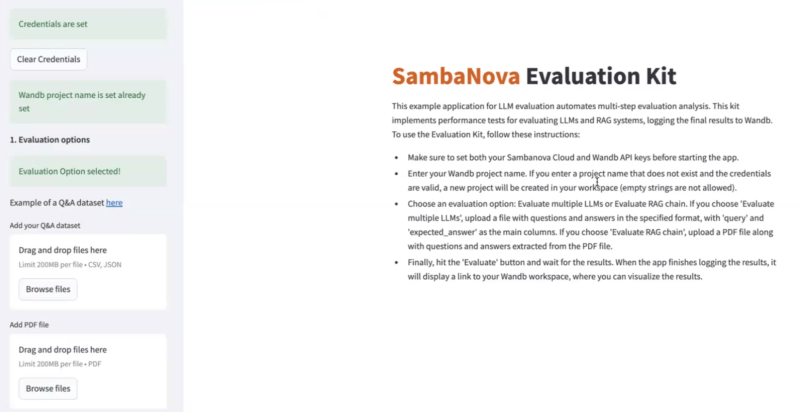

SambaNova Cloud users can now deploy this evaluation kit to automate multi-step evaluation analysis and run performance tests, with options to evaluate multiple LLMs or a RAG pipeline. The setup takes three steps:

1. Set the SambaNova Cloud and W&B API keys.
2. Choose an evaluation option.
3. Upload either a CSV file with ‘query’ and ‘expected answer’ as the main columns, or a PDF file with extracted questions and answers.

From there, one click of the “Evaluate” button runs the evaluation, logs the results, and displays a link to a W&B workspace where users can visualize and analyze them.
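The CSV input described earlier is easy to validate before uploading it. Below is a minimal loader using Python’s standard `csv` module. It assumes the header names are literally `query` and `expected answer`, as written in the description; the kit’s exact expected spelling may differ. `load_eval_rows` is a hypothetical helper, not part of the kit.

```python
import csv
import io
from typing import Dict, List, TextIO

# Assumed header names, taken from the description of the kit's CSV input.
REQUIRED_COLUMNS = ("query", "expected answer")

def load_eval_rows(f: TextIO) -> List[Dict[str, str]]:
    """Read query/expected-answer pairs from a CSV file object, skipping blank queries."""
    reader = csv.DictReader(f)
    header = [h.strip().lower() for h in (reader.fieldnames or [])]
    missing = [c for c in REQUIRED_COLUMNS if c not in header]
    if missing:
        raise ValueError(f"CSV is missing required column(s): {missing}")
    rows = []
    for raw in reader:
        # Normalize header names so " Query " and "query" match; drop overflow fields (key None).
        row = {k.strip().lower(): (v or "").strip() for k, v in raw.items() if k is not None}
        if row["query"]:
            rows.append({"query": row["query"], "expected answer": row["expected answer"]})
    return rows

if __name__ == "__main__":
    sample = io.StringIO("query,expected answer\nWhat is an RDU?,Reconfigurable Dataflow Unit\n,\n")
    print(load_eval_rows(sample))
```

Failing fast on a missing column surfaces a mislabeled file immediately, instead of partway through an evaluation run.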

Logging results to W&B, with metrics such as acceptance rate, completion tokens, cost, and input and output tokens, lets users take advantage of W&B’s visualizations and tables. They can easily compare different models on SambaNova Cloud and make the appropriate tradeoffs among accuracy, cost, and speed.
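Cost, for instance, is derived from token counts: each model’s input and output tokens are multiplied by that model’s per-token prices and summed. The sketch below rolls per-request records up into a per-model summary of accuracy, tokens, and cost. The `RequestRecord` fields, model names, and prices are placeholders invented for illustration. They are not SambaNova Cloud pricing or the kit’s logging schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple

@dataclass
class RequestRecord:
    model: str
    input_tokens: int
    output_tokens: int
    correct: bool  # e.g. whether a judge score cleared some threshold

# Hypothetical prices in USD per million tokens: (input, output). Not real pricing.
PRICES_PER_M: Dict[str, Tuple[float, float]] = {
    "model-large": (5.00, 7.00),
    "model-small": (0.10, 0.20),
}

def summarize(records: Iterable[RequestRecord]) -> Dict[str, Dict[str, float]]:
    """Aggregate per-request records into per-model accuracy, token, and cost totals."""
    totals = defaultdict(lambda: {"requests": 0, "correct": 0, "input_tokens": 0, "output_tokens": 0})
    for r in records:
        t = totals[r.model]
        t["requests"] += 1
        t["correct"] += int(r.correct)
        t["input_tokens"] += r.input_tokens
        t["output_tokens"] += r.output_tokens
    summary = {}
    for model, t in totals.items():
        in_price, out_price = PRICES_PER_M[model]
        # cost = (input_tokens * input_price + output_tokens * output_price) / 1e6
        cost = (t["input_tokens"] * in_price + t["output_tokens"] * out_price) / 1_000_000
        summary[model] = {
            "accuracy": t["correct"] / t["requests"],
            "input_tokens": t["input_tokens"],
            "output_tokens": t["output_tokens"],
            "cost_usd": cost,
        }
    return summary
```

A summary like this could then be logged with the `wandb` client to compare models side by side, for example as a `wandb.Table` with one row per model passed to `wandb.log`.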

One model may have the best accuracy, for example, while another delivers comparable accuracy at much lower cost. This kind of granular evaluation and analysis is essential for AI app developers who want to deliver the most accurate solutions while being responsible about token usage and hardware costs.
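The tradeoff in that example can be written as a simple selection rule. First find the best accuracy, then choose the cheapest model whose accuracy falls within a tolerance of it. The rule, the 0.02 default tolerance, and the model names and numbers in the usage block are illustrative assumptions, not kit features or measured results. The function only expects a per-model mapping with `accuracy` and `cost_usd` fields.

```python
from typing import Mapping

def cheapest_within_tolerance(
    summary: Mapping[str, Mapping[str, float]], tolerance: float = 0.02
) -> str:
    """Return the lowest-cost model whose accuracy is within `tolerance` of the best."""
    if not summary:
        raise ValueError("summary is empty")
    best_acc = max(m["accuracy"] for m in summary.values())
    candidates = {name: m for name, m in summary.items() if m["accuracy"] >= best_acc - tolerance}
    # Break cost ties by higher accuracy, then by name for determinism.
    return min(candidates, key=lambda n: (candidates[n]["cost_usd"], -candidates[n]["accuracy"], n))

if __name__ == "__main__":
    # Made-up numbers for illustration only.
    summary = {
        "model-large": {"accuracy": 0.91, "cost_usd": 0.017},
        "model-medium": {"accuracy": 0.90, "cost_usd": 0.004},
        "model-small": {"accuracy": 0.80, "cost_usd": 0.0004},
    }
    print(cheapest_within_tolerance(summary))
```

A tolerance of 0 always picks the most accurate model. Widening it trades accuracy for cost, which is the kind of judgment call the W&B comparison views are meant to inform.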

As LLM development continues at a blistering pace, SambaNova Systems represents a powerful example of how specialized AI hardware combined with sophisticated evaluation tools can drive the next generation of AI applications.

“SambaNova delivers the next evolution in Enterprise AI,” said Ankomah. “While others optimize existing architectures, we’ve built something fundamentally different – a system that manages dozens of complex models simultaneously. Access this power through SambaNova Cloud or deploy on-premise with SambaNova Studio, and use our Evaluation Jumpstart Kit to benchmark your models’ speed, cost and accuracy with metrics flowing directly to Weights & Biases for complete visibility.”