Fueling ML innovation at scale: Inside Pinterest's machine learning platform

Visual discovery platform Pinterest is one of the most popular websites today, serving nearly 600 million monthly active users as part of their company mission to “Bring everyone the inspiration to create a life they love.” This inspiration takes the form of hundreds of billions of saved Pins.

Naturally, operating at such extraordinary scale means hundreds of millions of ML inferences per second and hundreds of thousands of ML training jobs per month, all under the purview of just several hundred ML engineers. Such scale also requires a sophisticated and cohesive ML infrastructure and workflow. The previously fragmented and decentralized approach at Pinterest was creating bottlenecks that threatened to slow innovation just when the business needed it most.

Through an intentional and dedicated collaboration between Pinterest’s Advanced Technologies Group and their ML Platform team, Pinterest developed and introduced MLEnv, a standardized ML engine that is now leveraged by nearly every ML job at the company and includes Weights & Biases as a core component.

At Weights & Biases’ annual Fully Connected event, Senior Engineering Manager Karthik Anantha Padmanabhan and Software Engineer Bonnie Han presented the challenges that had previously bottlenecked the team, before walking through their entire workflow.

The massive scale of ML at Pinterest

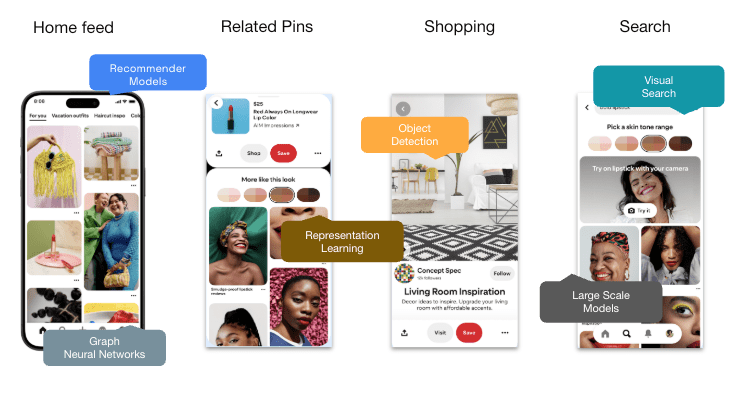

Pinterest’s massive scale spans four core product surfaces that define how users discover inspiration:

- Home Feed: the first surface users land on, where the Pinterest algorithm shows the most relevant Pins to users

- Search: users can query via text or even an image to surface relevant Pins

- Related Pins: giving users the chance to find similar Pins to items they’ve already saved

- Shopping: embedded throughout all surfaces to find items that are available for purchase

The ML workloads across these product surfaces fall broadly into two categories, each with distinct computational demands. The Home Feed and Search rely on a classic recommendation system, with retrieval and ranking models. Moving to larger models in recent years has delivered gains in engagement and revenue, along with a concurrent 100x growth in FLOPS. This has raised the requirements for intensive data preprocessing and real-time inference.

Meanwhile, Content Understanding Models operate on Pinterest’s Pin corpus, where every Pin is indexed and rich signals and metadata are extracted from it. These models are even more compute intensive, requiring multi-node and multi-GPU training, as well as low-latency GPU-to-GPU communication and near real-time inference.

With models this data- and GPU-intensive, the ML Platform team at Pinterest wanted to improve their workflow and infrastructure with three key goals in mind:

- Accelerate ML engineer productivity, particularly in going from ideation to A/B experimentation with less friction

- Enable SOTA ML applications, with infrastructure that supports the growth of model sizes and complexity, and new paradigms

- Keep systems highly efficient so they can keep operating at scale. How can they keep serving latency within budget, and keep costs low while serving recommendation systems on thousands of GPUs?

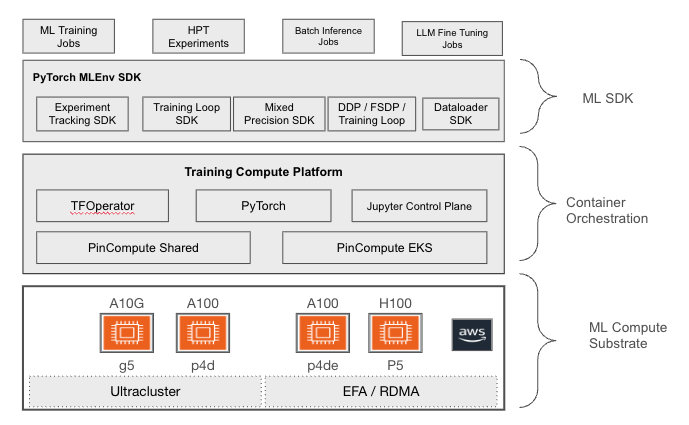

The answer: creating and implementing MLEnv, Pinterest’s ML engine, on top of a cutting-edge orchestration layer called Training Compute Platform (TCP).

MLEnv, TCP and how Weights & Biases Models supports this framework

“We had several bottlenecks for ML productivity and innovation,” explained Karthik. “A lot of development happened in silos, there was a lack of standardization – including with different frameworks, where some MLEs used TensorFlow and some used PyTorch, with no restrictions on versions. We had a hard time replicating wins, and there was a lot of redundancy and time spent reinventing the wheel.”

To address these pain points, the team pushed for a platform that was optimized for model iteration. That led to the development of MLEnv, a full-stack framework that was the result of collaboration between their Advanced Technologies Group and the ML Platform team. MLEnv sits on top of an orchestration layer called Training Compute Platform (TCP), an in-house Kubernetes-based compute platform, used to deploy MLEnv workloads. The entire system runs on top of AWS hardware.

MLEnv represents a carefully balanced approach to standardization that preserves flexibility while eliminating infrastructure friction. Centralized on a single framework (PyTorch) and model version, but giving MLEs full flexibility over their model architecture and training loop, MLEnv has been a huge boon for model iteration at Pinterest.

“MLEnv is integrated with the best and most commonly used tools that meet industry standards, such as Weights and Biases,” said Bonnie. “Upgrades have been offloaded to platform engineers so MLEs can just focus on modelling, and any improvements to the system can be quickly propagated across the unified stack. As a direct result of MLEnv, we have seen massive improvements for ML development velocity.”

MLEs at Pinterest who use MLEnv get access to 150+ prebuilt ML architectures and losses, training and serving acceleration tooling, and advanced functionality that could typically take months to implement and develop. This includes hyperparameter tuning, data loading, experiment management and fault-tolerant training, all of which the team also leverages Weights & Biases for.

“We’ve integrated Weights & Biases for all our experiment tracking across the organization, in addition to relying on the W&B Registry for model management,” said Bonnie. “We’ve also introduced Fault Tolerance training, which uses Weights & Biases as a checkpoint manager and sanity check.”

Because improving experimentation is a stated goal, logging and tracking every experiment idea and result has made W&B critical to Pinterest’s ML workflow. The team also relies on Artifact versioning (for the aforementioned sanity checkpoints) and on W&B Registry as the single source of truth for model artifacts and metadata management across the organization.
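To make the idea of fault-tolerant training concrete, here is a minimal sketch of checkpoint-and-resume logic. It is not Pinterest's implementation: a local directory of JSON files stands in for the W&B-managed checkpoints described above, and every name in it (`latest_checkpoint`, `train`, `fail_at`, the `step_*.json` layout) is hypothetical.

```python
import json
import tempfile
from pathlib import Path


def latest_checkpoint(ckpt_dir: Path):
    """Return (step, state) for the newest checkpoint, or (0, None) if none exist."""
    files = sorted(ckpt_dir.glob("step_*.json"))
    if not files:
        return 0, None
    state = json.loads(files[-1].read_text())
    return state["step"], state


def train(ckpt_dir: Path, total_steps: int, save_every: int, fail_at=None):
    """Toy training loop that always resumes from the last saved step."""
    step, state = latest_checkpoint(ckpt_dir)
    loss = state["loss"] if state else 1.0
    while step < total_steps:
        step += 1
        loss *= 0.9  # stand-in for a real optimizer step
        if step % save_every == 0:
            # Zero-padded names keep lexicographic order equal to step order.
            path = ckpt_dir / f"step_{step:08d}.json"
            path.write_text(json.dumps({"step": step, "loss": loss}))
        if fail_at is not None and step == fail_at:
            raise RuntimeError(f"simulated node failure at step {step}")
    return step, loss


def demo():
    """Crash a run at step 5, then restart it and let it finish."""
    with tempfile.TemporaryDirectory() as tmp:
        ckpt_dir = Path(tmp)
        try:
            train(ckpt_dir, total_steps=10, save_every=2, fail_at=5)
        except RuntimeError:
            pass  # in production the platform would reschedule the job
        resumed_from, _ = latest_checkpoint(ckpt_dir)
        final_step, final_loss = train(ckpt_dir, total_steps=10, save_every=2)
        return resumed_from, final_step, final_loss
```

The restarted run only repeats the work done since the last checkpoint, so the checkpoint interval is the knob that trades storage and I/O against lost compute after a failure.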

Meanwhile, the team’s Training Compute Platform (TCP) serves as their orchestration layer for ML workflows. Built on top of Kubernetes, it is used by MLEnv to support single- and multi-node training, with a Python SDK to launch ML jobs that abstracts away low-level Kubernetes concepts and provides easy-to-use factory functions to construct jobs. In concert with MLEnv, TCP makes it easy and standardized for Pinterest’s MLEs to ideate, experiment, and develop.
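TCP's SDK is internal to Pinterest, so the following is only an illustrative sketch of what "factory functions that abstract away Kubernetes concepts" can look like. All class, function, and field names here are assumptions, as is the choice to emit a dict shaped like a plain Kubernetes `batch/v1` Indexed Job; the real platform may use different resource types entirely.

```python
from dataclasses import dataclass, field


@dataclass
class TrainingJobSpec:
    """Hypothetical user-facing description of a training job."""
    name: str
    image: str
    command: list
    num_nodes: int = 1
    gpus_per_node: int = 8
    env: dict = field(default_factory=dict)


def to_k8s_job(spec: TrainingJobSpec) -> dict:
    """Expand the spec into a dict shaped like a Kubernetes batch/v1 Job."""
    container = {
        "name": "trainer",
        "image": spec.image,
        "command": spec.command,
        "env": [{"name": k, "value": str(v)} for k, v in sorted(spec.env.items())],
        "resources": {"limits": {"nvidia.com/gpu": spec.gpus_per_node}},
    }
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": spec.name},
        "spec": {
            # One pod per node; Indexed mode gives each pod a stable index.
            "completions": spec.num_nodes,
            "parallelism": spec.num_nodes,
            "completionMode": "Indexed",
            "template": {
                "spec": {"restartPolicy": "Never", "containers": [container]}
            },
        },
    }


def single_node_job(name, image, command, gpus=8):
    """Factory for the common case: one node, a handful of GPUs."""
    return to_k8s_job(TrainingJobSpec(name, image, command, 1, gpus))


def multi_node_job(name, image, command, num_nodes, gpus_per_node=8):
    """Factory for distributed training across several nodes."""
    if num_nodes < 2:
        raise ValueError("multi_node_job needs at least 2 nodes")
    spec = TrainingJobSpec(
        name, image, command, num_nodes, gpus_per_node,
        env={"WORLD_SIZE": num_nodes * gpus_per_node},  # illustrative only
    )
    return to_k8s_job(spec)
```

An engineer would then write something like `multi_node_job("ranker-v2", "registry.example.com/trainer:latest", ["python", "train.py"], num_nodes=4)` and never touch pod templates or resource limits directly.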

The metrics provided through TCP (with support from W&B logging) also give the team a level of training observability that plays a key role in enabling and improving training efficiency efforts. The team can break down costs by training job and by model to size opportunities by use case. They can also slice and dice the data to make more informed decisions on optimal instance selection and GPU utilization.
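As a rough illustration of this kind of breakdown, the sketch below aggregates per-run records into cost and GPU utilization per model. The records, instance labels, and prices are all made up for the example, and the function name `cost_by_model` is hypothetical; the talk did not describe how Pinterest computes these figures.

```python
from collections import defaultdict

# Hypothetical price per GPU-hour in US cents, keyed by made-up instance labels.
PRICE_CENTS_PER_GPU_HOUR = {"gpu-small": 100, "gpu-large": 400}

# Hypothetical per-run records, as might be exported from job metrics.
RUNS = [
    {"model": "homefeed-ranker", "instance": "gpu-large", "gpus": 8, "hours": 10, "gpu_util": 0.80},
    {"model": "homefeed-ranker", "instance": "gpu-small", "gpus": 4, "hours": 5, "gpu_util": 0.50},
    {"model": "search-retrieval", "instance": "gpu-small", "gpus": 2, "hours": 10, "gpu_util": 0.60},
]


def cost_by_model(runs, prices=PRICE_CENTS_PER_GPU_HOUR):
    """Sum cost and GPU-hours per model; average utilization weighted by GPU-hours."""
    totals = defaultdict(lambda: {"cost_cents": 0, "gpu_hours": 0, "util_sum": 0.0})
    for run in runs:
        gpu_hours = run["gpus"] * run["hours"]
        entry = totals[run["model"]]
        entry["cost_cents"] += gpu_hours * prices[run["instance"]]
        entry["gpu_hours"] += gpu_hours
        entry["util_sum"] += gpu_hours * run["gpu_util"]
    return {
        model: {
            "cost_cents": entry["cost_cents"],
            "gpu_hours": entry["gpu_hours"],
            "avg_gpu_util": entry["util_sum"] / entry["gpu_hours"],
        }
        for model, entry in totals.items()
    }


report = cost_by_model(RUNS)
```

Sorting such a report by cost, and reading it next to average utilization, is one simple way to spot models where a smaller instance type or better GPU packing might pay off.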

Developing and unifying MLEnv and TCP across the entire organization has led to huge leaps in ML innovation and massive efficiency improvements. Consolidating these platforms, supported by Weights & Biases Models, has democratized ML development and unlocked a new level of velocity.

“ML engineering is iterative, so being able to leverage these platforms and infrastructure to focus on maximizing iteration speed has been crucial for our MLEs,” said Karthik. “Instead of wasting time reinventing the wheel or being fragmented across multiple tech stacks, our team can now focus their systems knowledge and expertise on improving efficiency and unblocking key model initiatives.”