Eval-driven LLMOps: Lessons from Mercari's GenAI success at scale

Mercari has emerged as Japan’s largest C2C e-commerce marketplace, boasting over 23 million monthly active users and more than 3 billion items. With a group mission to “unleash the potential in all people” and a company mission to “create value in a global marketplace where anyone can buy & sell,” Mercari’s marketplace has focused on engagement and community, delivering a delightful selling and buying experience.

As an organization on the cutting edge of innovation and technology, and a longtime user of W&B Models, Mercari unsurprisingly leapt headfirst into the opportunities offered by LLMs and the promise of GenAI applications. The company quickly established a new team dedicated entirely to developing and integrating GenAI applications across core areas, including seller and buyer UX, customer service, and internal services.

The team took a pragmatic approach. Rather than chasing the Holy Grail of complicated use cases, they started small, looking for ways to add user value without degrading the user experience, and kept iterating and learning from each experience to move forward. This philosophy soon led them to explore a range of use cases, all supported by Weights & Biases.

“From out-of-the-box LLM output inspection to collaboration with non-engineering stakeholders, W&B enabled rapid iteration,” said ML Engineer Yuki Yada. “If it wasn’t for W&B giving us the ability to iterate on logs and validate model outputs on edge cases, we wouldn’t have been able to get these GenAI apps out quickly and get organizational buy-in.”

GenAI applications developed at Mercari

Working with subject-matter experts across functions, the GenAI team and its partner teams across the company soon identified several core use cases, all supported by W&B, including:

- Mercari AI Assist (seller support) – Suggests titles and descriptions, based on previous successful transactions, to help sellers improve unsuccessful listings. Sellers provide inputs such as uploaded images and suggested categories and are served a variety of high-performing options, including pricing suggestions.

- Mercari Dev Assist – An internal knowledge management system that uses LLMs to generate summaries and create embeddings, making institutional knowledge accessible. Internal sources include Slack, Microservice Wiki, and GitHub Issues.

- Metadata extraction – A fine-tuned LLM extracts dynamically specified attributes from listing descriptions to build a deeper understanding of customer-written content, which is in turn fed back into improving Mercari AI Assist.

- Search UX (Image Score) – LLMs were used to evaluate image aesthetics and to train downstream ML models that improve search results for both buyers and sellers in real time. Spearheaded by the Search ML team, this project delivered immediate customer value and catalyzed the subsequent widespread adoption of GenAI throughout Mercari’s Search & Discovery group.

- Mercari AI Listing Support – Automatically fills out necessary information such as the item description, condition, and price after a user takes a photo of the item and selects an item category, solving Mercari’s biggest seller pain point. Without the need to think of a title or description, users can complete the listing process in as few as three taps.
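The metadata extraction use case lends itself to a simple heuristic eval. The sketch below is a hypothetical illustration, not Mercari's implementation: it assumes each request names the attributes to extract and that a reference answer exists for each listing, then scores exact matches after light normalization. The function name `attribute_accuracy` and the example listing are made up for illustration.

```python
from typing import Dict, List


def attribute_accuracy(
    predicted: Dict[str, str],
    expected: Dict[str, str],
    requested: List[str],
) -> Dict[str, float]:
    """Score one extraction against a reference for the requested attributes.

    Values are compared after trimming whitespace and lowercasing. An attribute
    missing from either the prediction or the reference counts as incorrect.
    """

    def norm(value: str) -> str:
        return value.strip().lower()

    total = len(requested)
    if total == 0:
        return {"accuracy": 0.0, "missing_rate": 0.0}
    correct = sum(
        1
        for attr in requested
        if attr in predicted
        and attr in expected
        and norm(predicted[attr]) == norm(expected[attr])
    )
    # Attributes the model skipped entirely, tracked separately from wrong values.
    missing = sum(1 for attr in requested if attr not in predicted)
    return {"accuracy": correct / total, "missing_rate": missing / total}


if __name__ == "__main__":
    # Hypothetical listing; the attribute list is chosen per request.
    requested = ["brand", "color", "size"]
    expected = {"brand": "Nike", "color": "Black", "size": "27cm"}
    predicted = {"brand": "nike ", "color": "White"}
    print(attribute_accuracy(predicted, expected, requested))
```

Reporting the missing rate separately from accuracy helps distinguish a model that guesses wrong from one that silently skips requested attributes.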

One important early lesson was that relying on major model providers under the hood of their GenAI apps was very expensive, delivered inconsistent performance, and raised reliability concerns about building production features on someone else’s API. The team decided to fine-tune its own model and produced one that was both better in output quality and more scalable to run in production. This discovery was a huge win internally and opened up a host of new strategies.

“Without W&B Models, we might not have done this project at all because at that time, it was very ill-defined,” said Staff ML Engineer Teo Narboneta Zosa. “There was no way we were going to management to say, ‘Hey, let’s fine-tune a model.’ They would’ve said no way, but we showed them it was effective and we showed them it wasn’t as expensive and now it’s in production.”

W&B enables the team to quickly validate product-market fit, discover ways to improve, and spend more time delivering value to customers.

An evaluation-centric approach to GenAI

Mercari’s experience led the team to a fundamental thesis about building successful GenAI applications: evaluations matter most. While many organizations and GenAI teams have historically focused on optimizing model behavior (for example, through prompt engineering) or enhancing data (for example, with RAG systems) to improve their results, Mercari realized that high-quality evals were the true foundation for iterating quickly, and therefore for scaling GenAI applications beyond impressive demos into reliable, production-ready systems.

“To get to the great scale we’ve achieved, you need really good evals and really good tools like W&B Weave,” said Teo Zosa. “Focusing only on changing the behavior of the system via prompt engineering or fine-tuning prevents people from improving LLM products beyond a demo. Adding in high-quality evals and effective debugging is what creates a virtuous cycle that leads to truly great GenAI products. Good evals really depend on what the specific problem is, and that’s where you should spend a lot of your time upfront.”

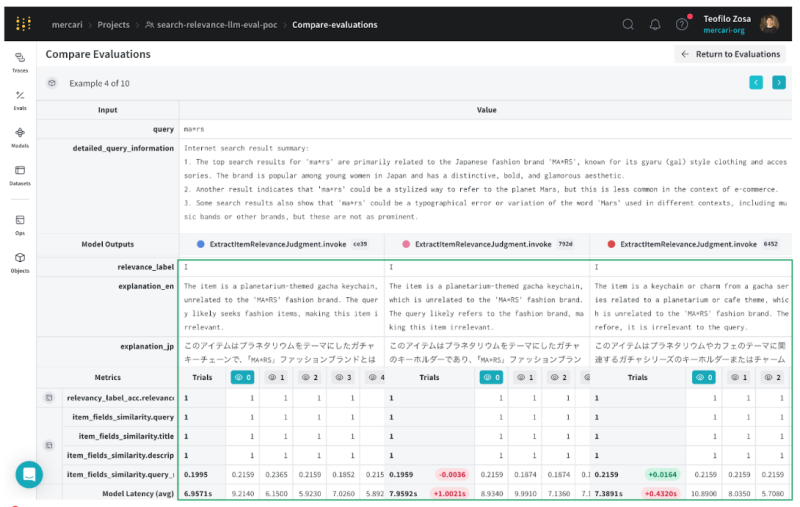

A key part of effective evals at Mercari is a workflow in which developers work in lockstep with subject-matter experts (SMEs) from the business area the GenAI app is being built for. The SMEs tell the developers what good output looks like and what doesn’t, and together they build not just the application but also good heuristic evals with custom metrics, such as Japanese-English similarity. The two sides then work independently but in sync, come together to align the metrics with human feedback, and continue the iterative cycle.
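Aligning a metric with SME feedback can be made concrete. The sketch below rests on stated assumptions: a character-bigram Jaccard similarity stands in for Mercari's custom metric (a true Japanese-English similarity would need a cross-lingual model, such as multilingual embeddings), and the hypothetical `best_threshold` helper picks the pass/fail cutoff that agrees most often with SME verdicts.

```python
from typing import List, Set, Tuple


def char_bigrams(text: str) -> Set[str]:
    """Character bigrams with whitespace removed; works for unspaced scripts like Japanese."""
    compact = "".join(text.split())
    return {compact[i : i + 2] for i in range(len(compact) - 1)}


def bigram_similarity(a: str, b: str) -> float:
    """Jaccard similarity of character bigrams, in [0, 1] (stand-in metric)."""
    x, y = char_bigrams(a), char_bigrams(b)
    if not x and not y:
        # Both strings are too short for bigrams: fall back to exact match.
        return 1.0 if "".join(a.split()) == "".join(b.split()) else 0.0
    return len(x & y) / len(x | y)


def agreement(scores: List[float], sme_labels: List[bool], threshold: float) -> float:
    """Fraction of examples where (score >= threshold) matches the SME verdict."""
    hits = sum((s >= threshold) == label for s, label in zip(scores, sme_labels))
    return hits / len(scores)


def best_threshold(scores: List[float], sme_labels: List[bool]) -> Tuple[float, float]:
    """Try each observed score as a cutoff; keep the one that agrees most with SMEs."""
    candidates = sorted(set(scores))
    best = max(candidates, key=lambda t: agreement(scores, sme_labels, t))
    return best, agreement(scores, sme_labels, best)


if __name__ == "__main__":
    reference = "ナイキ 黒 スニーカー 27cm"
    # Hypothetical model outputs paired with an SME's good/bad verdict.
    examples = [
        ("ナイキ 黒 スニーカー", True),
        ("黒いスニーカー", True),
        ("赤いバッグ", False),
    ]
    scores = [bigram_similarity(out, reference) for out, _ in examples]
    labels = [label for _, label in examples]
    threshold, agree = best_threshold(scores, labels)
    print(f"threshold={threshold:.2f} agreement={agree:.0%}")
```

If no threshold reaches acceptable agreement, that is a signal to revisit the metric itself with the SMEs rather than to keep tuning the application against it.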

The team experimented with a variety of evaluation methods across different use cases, including heuristic metrics (visualized in W&B), model-based evals like G-Eval, LLM-as-judge methods, and grounding and fact-checking. Tiered evaluation was important to the team: moving from heuristic metrics first, to LLM-based evals, and eventually to RAG-based evaluation or domain-expert feedback. So were evaluating their evals and adopting the right tools.
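A tiered evaluation can be sketched as a pipeline where cheap checks gate expensive ones. In this minimal version, assuming a judge function that returns a score in [0, 1], deterministic heuristics run first, only the outputs that pass are sent to the judge, and low-scoring outputs are flagged for domain-expert review. `fake_judge` is a hypothetical stand-in for an LLM-as-judge call; in practice it would wrap a model API and a judging prompt, such as a G-Eval style rubric.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class TierResult:
    output: str
    passed_heuristics: bool
    judge_score: Optional[float] = None
    needs_human_review: bool = False
    notes: List[str] = field(default_factory=list)


def heuristic_checks(output: str, max_len: int = 80) -> List[str]:
    """Tier 1: cheap, deterministic checks. Returns a list of failure reasons."""
    failures = []
    if not output.strip():
        failures.append("empty output")
    if len(output) > max_len:
        failures.append(f"longer than {max_len} characters")
    return failures


def tiered_eval(
    outputs: List[str],
    judge: Callable[[str], float],
    judge_threshold: float = 0.7,
) -> List[TierResult]:
    """Tier 2 (judge) runs only on heuristic survivors; tier 3 is human review."""
    results = []
    for out in outputs:
        failures = heuristic_checks(out)
        if failures:
            # Rejected without spending any judge calls.
            results.append(TierResult(out, passed_heuristics=False, notes=failures))
            continue
        score = judge(out)
        results.append(
            TierResult(
                out,
                passed_heuristics=True,
                judge_score=score,
                needs_human_review=score < judge_threshold,
            )
        )
    return results


def fake_judge(output: str) -> float:
    """Hypothetical stand-in for an LLM-as-judge call."""
    return 0.9 if "brand" in output.lower() else 0.4


if __name__ == "__main__":
    outputs = ["", "Nike sneakers, brand new, size 27cm", "Sneakers", "x" * 100]
    for r in tiered_eval(outputs, fake_judge):
        print(r.passed_heuristics, r.judge_score, r.needs_human_review, r.notes)
```

Ordering tiers by cost means most failures are caught before any judge tokens are spent, and expert time goes only to the cases the automated checks could not settle.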

“Great evals and LLMOps tools are the answers for how to get iterating quickly on your GenAI apps, and the tools you use don’t have to be complicated, they just need to be able to support the needs for an evaluation-centric AI workflow,” said Teo. “W&B Weave was that solution for us, and what made it even better was it solved the problem of, ‘Can I just, in a few lines of code, do stuff?’ It was very simple and effective.”

How W&B Weave accelerated and scaled up Mercari’s GenAI

Weave proved an immediate help, unlocking out of the box the first three stages of the team’s eval-centric workflow:

- Validating an MVP

- Integrating observability and reproducibility into a GenAI application

- Adding evals, starting with built-in scorers before creating custom metrics aligned to the problem at hand

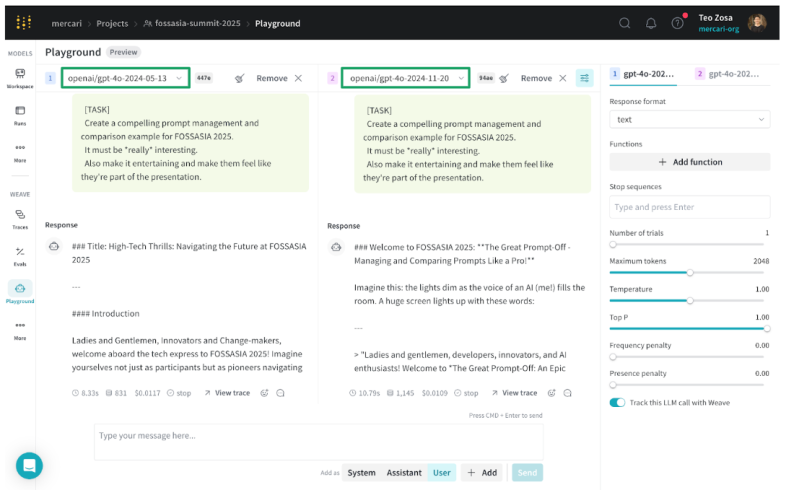

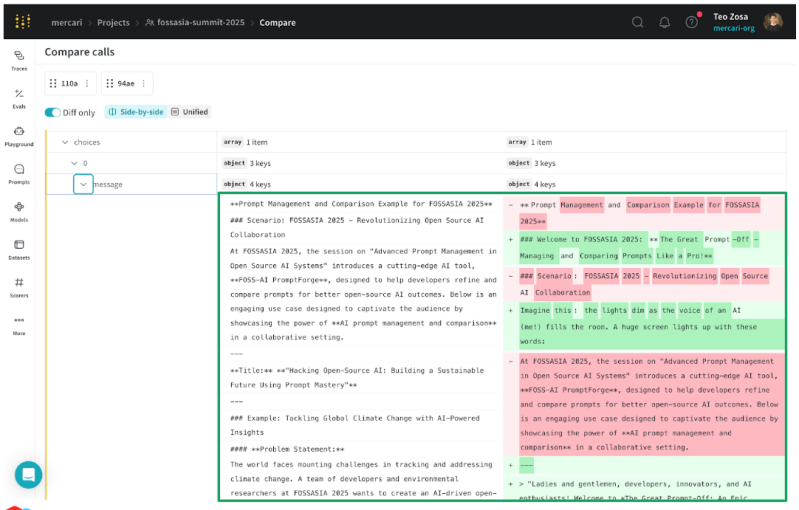

“W&B Weave gives us facilities to try prompt A vs. prompt B, against different models, and compare between those different models and see what changed,” said Teo. “Having observability in both our inputs and outputs lets us very easily narrow down what’s working best for this app in this use case.”
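The prompt A vs. prompt B comparison Teo describes boils down to a grid: every prompt template crossed with every model, scored on the same dataset. Weave provides its own evaluation and comparison tooling; the stdlib sketch below only illustrates the shape of that comparison, with hypothetical stub models and an exact-match scorer standing in for real LLM calls and metrics.

```python
from itertools import product
from statistics import mean
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]  # prompt in, completion out
Scorer = Callable[[str, str], float]  # (output, expected) -> score


def compare(
    prompts: Dict[str, str],
    models: Dict[str, Model],
    dataset: List[Dict[str, str]],
    scorer: Scorer,
) -> Dict[Tuple[str, str], float]:
    """Mean score of every (prompt, model) pair on the same dataset."""
    table: Dict[Tuple[str, str], float] = {}
    for (p_name, template), (m_name, model) in product(prompts.items(), models.items()):
        # str.format ignores unused keys such as "expected".
        scores = [scorer(model(template.format(**row)), row["expected"]) for row in dataset]
        table[(p_name, m_name)] = mean(scores)
    return table


def exact_match(output: str, expected: str) -> float:
    return float(output.strip().lower() == expected.strip().lower())


if __name__ == "__main__":
    dataset = [
        {"item": "nike sneakers", "expected": "nike sneakers"},
        {"item": "black bag", "expected": "black bag"},
    ]
    prompts = {"A": "Title: {item}", "B": "Listing title for {item}"}
    # Hypothetical stub "models" so the sketch runs without any API.
    models = {
        "echo": lambda p: p.rsplit(": ", 1)[-1],
        "shout": lambda p: p.upper(),
    }
    results = compare(prompts, models, dataset, exact_match)
    for (p, m), score in sorted(results.items(), key=lambda kv: -kv[1]):
        print(f"prompt {p} x {m}: {score:.2f}")
```

Holding the dataset and scorer fixed is what makes the cells comparable: any difference along a row or column can be attributed to the prompt or the model alone.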

Reproducibility is often considered one of the trickier aspects of machine learning, and working with LLMs is no different. With W&B Weave, the team can easily manage and version prompts. For example, if they execute thousands of runs without changing the prompt, only one prompt version is recorded. Likewise, when the code changes, the pre-processing logic is tweaked, or the dataset needs closer examination for debugging, the team has facilities for versioning and reproducing all of it.
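Thousands of runs with an unchanged prompt yielding a single version is what content-addressed versioning provides. The sketch below illustrates the idea with a hypothetical `VersionStore` class that hashes each object's canonical JSON form; it is not Weave's implementation, which may differ in detail.

```python
import hashlib
import json
from typing import Any, Dict, List


class VersionStore:
    """Content-addressed store: identical content always maps to one version."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[str]] = {}  # name -> ordered digests
        self._objects: Dict[str, Any] = {}  # digest -> content

    @staticmethod
    def digest(content: Any) -> str:
        # Canonical JSON (sorted keys) so equal dicts hash identically.
        payload = json.dumps(content, sort_keys=True, ensure_ascii=False)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def publish(self, name: str, content: Any) -> str:
        """Record content under name; return "name:vN", reusing N if unchanged."""
        d = self.digest(content)
        history = self._versions.setdefault(name, [])
        if d not in history:
            history.append(d)
            self._objects[d] = content
        return f"{name}:v{history.index(d)}"

    def get(self, ref: str) -> Any:
        """Retrieve the exact content behind a "name:vN" reference."""
        name, version = ref.rsplit(":v", 1)
        return self._objects[self._versions[name][int(version)]]


if __name__ == "__main__":
    store = VersionStore()
    # Many runs, same prompt: still a single version.
    refs = {store.publish("title-prompt", "Write a title for: {item}") for _ in range(1000)}
    print(refs)
    print(store.publish("title-prompt", "Write a short title for: {item}"))
    print(store.publish("preprocess", {"lowercase": True, "max_len": 80}))
```

Hashing sorted-key JSON means a config dict with the same settings in a different key order still maps to the same version, while any real edit to a prompt or pre-processing config produces a new one.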

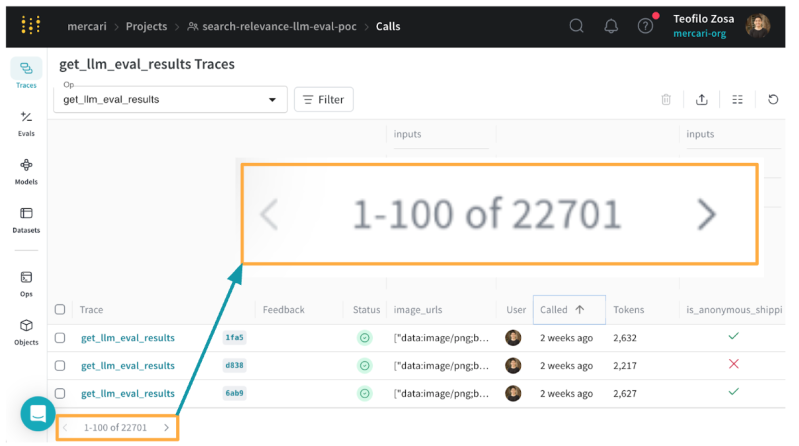

“Because Weave gives us all these facilities to make it really easy, we can iterate really quickly,” said Teo. “In two weeks, one engineer alone could have over 22,000 runs, runs that we can now track over our evaluations with metrics that show us what went up and what went down. Or qualitatively, being able to see the exact prompt output for each of these different models with the same input on the dataset we tracked earlier, with all the associated runs. Weave just makes things so much more scalable for our team; scalable both up in our dev cycles and out between multiple engineers and teams.”

For Teo and the team, iteration speed became a competitive advantage. The W&B platform turned their workflow into a seamless cycle of refinement: team members share evaluation results, subject-matter experts give targeted feedback on model outputs, and engineers make precise adjustments with full reproducibility. Visibility across the entire pipeline has eliminated frustrating debugging sessions that amounted to searching for a needle in a haystack.

“Iterating quickly is the key to success,” said Teo. “Within minutes, we can all see exactly what’s happening. We can make a decision and move forward. Debugging, tracking, reproducibility, and observability, with facilities for rapid feedback loops both sync and even async with W&B links, reports, and screenshots over Slack. That is in action, what iterating quickly and succeeding with GenAI apps looks like.”

The lesson from Teo and the Mercari team’s experience is that eval-centric development not only provides a strong foundation for GenAI applications but also acts as an enabler. Embedding high-quality evals throughout your workflow ensures that you are optimizing your application for the problem at hand. Coupled with sophisticated LLMOps, this eval-centric workflow is the key to moving much faster, and in the right direction, toward high-quality GenAI applications.