Before you dive into training, it’s important to cover how LLMs scale. Understanding scaling lets you effectively balance the size and complexity of your model and the size of the data you’ll use to train it.

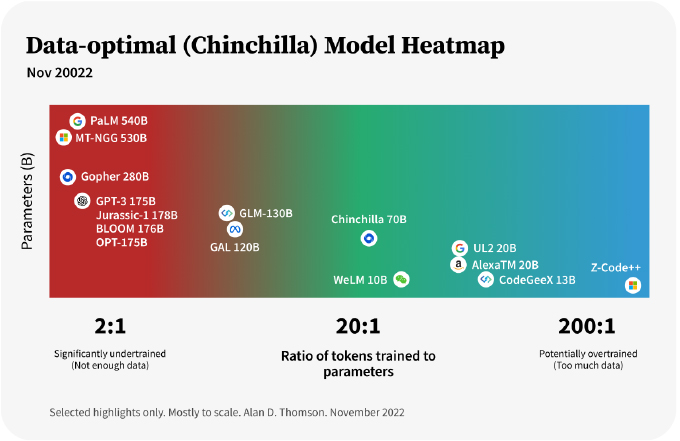

Some relevant history here: OpenAI originally introduced “the LLM scaling laws” in 2020. They suggested that increasing model size mattered more than increasing data size, and this view held for about two years.

That changed in 2022, when DeepMind put forward almost the polar opposite view in their paper “Training Compute-Optimal Large Language Models.” They found that existing LLMs were significantly undertrained and that increasing the size of the foundational training dataset leads to better performance. Put simply: these large models weren’t trained on nearly enough data.

DeepMind showcased this with a model called Chinchilla, which is one quarter the size of the Gopher model above but was trained on 4.6x more data. Despite its smaller size, the extra training data let Chinchilla outperform Gopher and other LLMs.

DeepMind claims that model size and the number of training tokens* should instead increase at roughly the same rate to achieve optimal performance. If you get a 10x increase in compute, you should make your model about 3.16x (√10) bigger and train it on about 3.16x more data; if you get a 100x increase in compute, you should make your model 10x bigger and your data 10x bigger.

*Note: Tokenization is the essential NLP step of splitting a piece of text into smaller units called tokens, which can be words, characters, or subwords. The number of training tokens is the size of the training data measured in tokens, after tokenization. We will dive into specific tokenization methods later.
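DeepMind’s equal-rate rule can be sketched in a few lines. This assumes, as a simplification not stated above, that training compute is proportional to parameters × tokens; if both grow by the same factor, that factor is the square root of the compute increase. The function name `chinchilla_scale` is hypothetical.

```python
import math


def chinchilla_scale(compute_multiplier: float) -> tuple[float, float]:
    """Return (model_size_multiplier, data_multiplier) for a compute multiplier.

    Assumes compute is proportional to parameters * tokens and that, per the
    Chinchilla finding, both grow at the same rate, so each factor is the
    square root of the compute multiplier.
    """
    if compute_multiplier <= 0:
        raise ValueError("compute_multiplier must be positive")
    factor = math.sqrt(compute_multiplier)
    return factor, factor


for k in (10, 100):
    model_x, data_x = chinchilla_scale(k)
    print(f"{k}x compute -> {model_x:.2f}x params, {data_x:.2f}x tokens")
```

Because the two factors multiply back to the compute increase, doubling one without the other leaves the model off the compute-optimal line.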

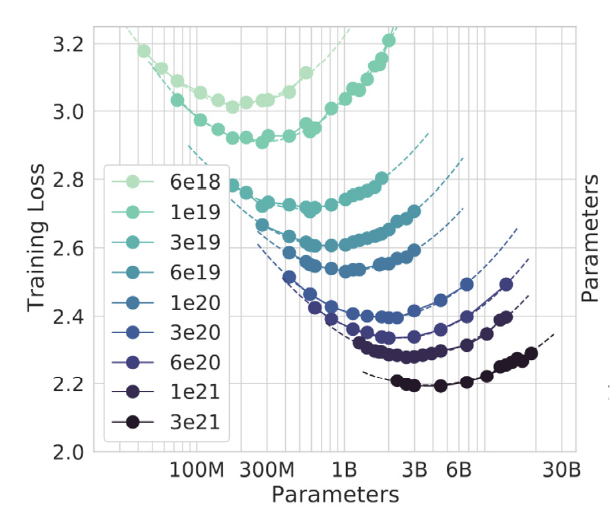

Each curve holds training compute fixed. To the left of the minimum on each curve, models are too small: a larger model trained on less data would be an improvement. To the right of the minimum, models are too large: a smaller model trained on more data would be an improvement. The best models sit at each curve’s minimum.
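The U shape of these curves can be reproduced with a short sweep. This sketch assumes the parametric loss fit reported in the Chinchilla paper, L(N, D) = E + A/N^α + B/D^β, and the common approximation that training compute C ≈ 6·N·D, so at a fixed budget a larger model gets proportionally fewer tokens. The budget and model-size grid are illustrative choices, and the helper names are hypothetical.

```python
# Parametric loss fit reported in the Chinchilla paper (Hoffmann et al., 2022):
#   L(N, D) = E + A / N**alpha + B / D**beta
E, A, B, ALPHA, BETA = 1.69, 406.4, 410.7, 0.34, 0.28


def loss(n_params: float, n_tokens: float) -> float:
    """Predicted pre-training loss for n_params parameters trained on n_tokens tokens."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA


def isoflop_curve(compute: float, model_sizes: list[float]) -> list[tuple[float, float, float]]:
    """For a fixed compute budget, return (params, tokens, loss) per model size.

    Uses the approximation C ~= 6 * N * D, so D = C / (6 * N).
    """
    curve = []
    for n in model_sizes:
        d = compute / (6 * n)
        curve.append((n, d, loss(n, d)))
    return curve


budget = 1e21  # FLOPs; illustrative fixed budget
sizes = [10 ** (7 + i * 0.25) for i in range(17)]  # 1e7 .. 1e11 params, log-spaced
curve = isoflop_curve(budget, sizes)
best_n, best_d, best_loss = min(curve, key=lambda row: row[2])
print(f"best: ~{best_n:.2e} params on ~{best_d:.2e} tokens (loss {best_loss:.3f})")
```

Sizes left of the printed optimum lose because the model is too small; sizes to its right lose because too few tokens fit in the budget. The exact optimum depends on the fitted constants, so treat the printed size as illustrative rather than a recommendation.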

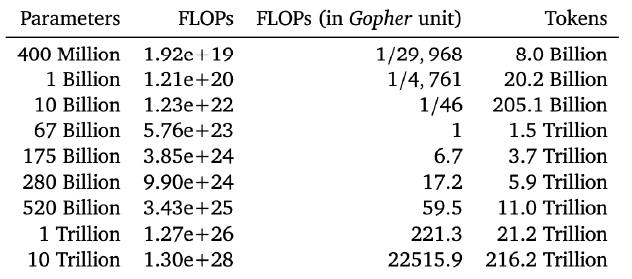

DeepMind provides the following chart showing how much training data and compute you’d need to optimally train models of various sizes.
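Ballpark versions of these numbers can be computed with two rules of thumb. Both are assumptions here, not values read from the chart: about 20 training tokens per parameter (the ratio Chinchilla itself used, roughly 70B parameters on roughly 1.4T tokens) and training compute of about 6 FLOPs per parameter per token. `compute_optimal_budget` is a hypothetical helper.

```python
# Rough compute-optimal budgets, assuming ~20 training tokens per parameter
# (Chinchilla's own ratio: ~70B parameters on ~1.4T tokens) and the common
# approximation that training costs ~6 FLOPs per parameter per token.
TOKENS_PER_PARAM = 20
FLOPS_PER_PARAM_TOKEN = 6


def compute_optimal_budget(n_params: float) -> tuple[float, float]:
    """Return (training_tokens, training_flops) for a model with n_params parameters."""
    tokens = TOKENS_PER_PARAM * n_params
    flops = FLOPS_PER_PARAM_TOKEN * n_params * tokens
    return tokens, flops


for n in (1e9, 10e9, 70e9, 175e9):
    tokens, flops = compute_optimal_budget(n)
    print(f"{n / 1e9:>5.0f}B params -> {tokens / 1e12:5.2f}T tokens, {flops:.2e} FLOPs")
```

For a 70B-parameter model this gives 1.4T tokens and about 5.9e23 FLOPs. The chart’s own figures come from DeepMind’s fitted scaling analysis and may differ somewhat, so use the sketch for rough estimates only.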

In summary, current best practices for choosing the size of your LLM are largely based on two rules: