
It should come as no surprise that pre-training LLMs is a hardware-intensive effort. The following examples of current models are a good guide here:

• PaLM (540B, Google):

6,144 TPU v4 chips in total, spread across two TPU v4 Pods connected over a data center network (DCN), using a combination of model and data parallelism.

• OPT (175B, Meta AI):

992 80GB A100 GPUs, utilizing fully sharded data parallelism with Megatron-LM tensor parallelism.

• GPT-NeoX (20B, EleutherAI):

96 40GB A100 GPUs in total.

• Megatron-Turing NLG (530B, NVIDIA & MSFT):

560 DGX A100 nodes, each with eight NVIDIA 80GB A100 GPUs.

Training LLMs is challenging from an infrastructure perspective for two big reasons. For starters, it is simply no longer possible to fit all the model parameters in the memory of even the largest GPU (e.g., NVIDIA 80GB-A100), so you’ll need some parallel architecture here. The other challenge is that a large number of compute operations can result in unrealistically long training times if you aren’t concurrently optimizing your algorithms, software, and hardware stack (e.g., training GPT-3 with 175B parameters would require about 288 years with a single V100 NVIDIA GPU).
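To make the memory argument concrete, here is a rough back-of-the-envelope sketch. It assumes 2 bytes per parameter for fp16 weights alone, and roughly 16 bytes per parameter for mixed-precision training with Adam (fp16 weights and gradients plus fp32 master weights, momentum, and variance). Both figures are commonly cited rules of thumb rather than exact numbers for any particular framework, the function names are illustrative, and activation memory is ignored entirely.

```python
import math

GB = 10**9  # decimal gigabytes

def weights_only_gb(n_params, bytes_per_param=2):
    """Memory for the weights alone (assumes fp16: 2 bytes per parameter)."""
    return n_params * bytes_per_param / GB

def training_state_gb(n_params, bytes_per_param=16):
    """Weights + gradients + Adam state (assumes ~16 bytes per parameter)."""
    return n_params * bytes_per_param / GB

def min_gpus(required_gb, gpu_gb=80):
    """Smallest number of GPUs whose combined memory covers required_gb."""
    return math.ceil(required_gb / gpu_gb)

n_params = 175 * 10**9  # a GPT-3-sized model

print(weights_only_gb(n_params))              # 350.0 GB just for fp16 weights
print(min_gpus(weights_only_gb(n_params)))    # 5 x 80GB GPUs to hold the weights
print(training_state_gb(n_params))            # 2800.0 GB of training state
print(min_gpus(training_state_gb(n_params)))  # 35 x 80GB GPUs, before activations
```

Even under these optimistic assumptions, the weights alone are more than four times the capacity of a single 80GB A100, which is why some form of model sharding is unavoidable at this scale.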

Although we’re only a few years removed from the transformer breakthrough, LLMs have already grown massively in performance, cost, and promise. At W&B, we’ve been fortunate to see more teams try to build LLMs than anyone else. But many of the critical details and key decision points are often passed down by word of mouth.

The goal of this white paper is to distill the best practices for training your own LLM from scratch. We’ll cover everything from scaling and hardware to dataset selection and model training, letting you know which tradeoffs to consider and flagging some potential pitfalls along the way. This is meant to be a fairly exhaustive look at the key steps and considerations you’ll make when training an LLM from scratch.

The first question you should ask yourself is whether training one from scratch is right for your organization. As such, we’ll start there:

Parallelization refers to splitting up tasks and distributing them across multiple processors or devices, such as GPUs, so that they can be completed simultaneously. This allows for more efficient use of compute resources and faster completion times compared to running on a single processor or device. Parallelized training across multiple GPUs is an effective way to reduce the overall time needed for the training process.

There are several different strategies that can be used to parallelize training, including gradient accumulation, micro-batching, data parallelization, tensor parallelization, pipeline parallelization, and more. Typical LLM pre-training employs a combination of these methods. Let’s define each:

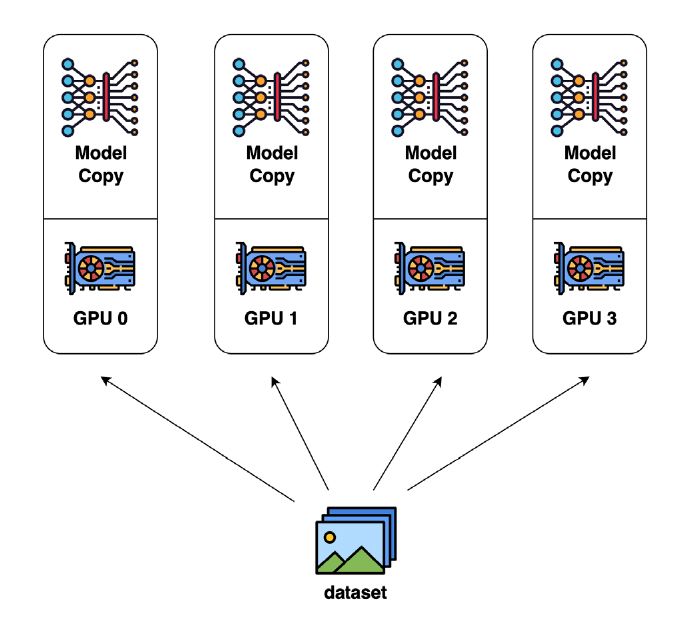

Data parallelism is the most common approach for dealing with large datasets that cannot fit on a single machine in a deep learning workflow.

More specifically, data parallelism divides the training data into multiple shards (partitions) and distributes them to various nodes. Each node first trains its local copy of the model on its own shard, then communicates with the other nodes at certain intervals to combine their results into the global model. The parameter updates can be either synchronous or asynchronous. The advantages of this method are that it increases compute efficiency and is relatively easy to implement. The biggest downside is that during the backward pass each GPU has to send its full gradient to all the other GPUs. It also replicates the model and optimizer state across all workers, which is rather memory inefficient.
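The synchronous variant can be sketched on a single machine without any distributed framework. The toy example below is only an illustration under simplifying assumptions: the "model" is a single weight `w` in `y = w * x`, the "workers" are plain Python lists, and `all_reduce_mean` is a hypothetical stand-in for the collective communication a real framework would perform. It is not how any particular library implements data parallelism.

```python
def grad(w, shard):
    """Gradient of mean squared error over one shard for the model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def shard_data(data, n_workers):
    """Split data into equal-sized shards (assumes len(data) divides evenly)."""
    size = len(data) // n_workers
    return [data[i * size:(i + 1) * size] for i in range(n_workers)]

def all_reduce_mean(values):
    """Stand-in for an all-reduce: every worker receives the same average."""
    mean = sum(values) / len(values)
    return [mean] * len(values)

def data_parallel_step(replicas, shards, lr):
    """One synchronous step: local gradients, all-reduce, identical updates."""
    local_grads = [grad(w, s) for w, s in zip(replicas, shards)]
    synced = all_reduce_mean(local_grads)
    return [w - lr * g for w, g in zip(replicas, synced)]

data = [(float(x), 3.0 * x) for x in range(1, 9)]  # true weight is 3.0
shards = shard_data(data, n_workers=4)
replicas = [0.0] * 4  # every worker holds a full copy of the model

for _ in range(200):
    replicas = data_parallel_step(replicas, shards, lr=0.01)

print(replicas)  # all four replicas stay identical and approach 3.0
```

Because the shards are equal in size, averaging the per-shard gradients gives the same result as computing the gradient over the full batch, so the replicas never drift apart. The sketch also makes both downsides visible: every worker stores the whole model, and every step exchanges a full gradient.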