Generative AI is revolutionizing the financial services industry by automating complex tasks, enhancing customer interactions, and bolstering security. In banking, generative AI models can generate predictive insights, assist in credit assessments, and streamline processes, introducing new levels of efficiency and personalization. As financial institutions embrace this technology, generative AI promises to reshape the way they operate and engage with customers, creating opportunities for innovation and growth.

Yet, the stakes in finance are uniquely high. Decisions made by AI systems can have significant consequences. Whether approving a loan, flagging a transaction for fraud, or managing risk, the outcomes of these AI-driven decisions directly impact individuals’ financial well-being, regulatory compliance, and even market stability.

Today, we will explore the benefits and challenges of generative AI in banking, weighing its transformative potential against the responsibility required to manage its impact.

GenAI’s reliance on vast datasets requires stringent privacy protections and careful data management to avoid unintentional exposure of sensitive information. Additionally, because they learn from large and diverse data, generative AI models are prone to amplifying biases, necessitating rigorous data auditing to ensure fair and ethical outcomes in areas like credit scoring.

Generative AI also holds potential for detailed explanations of its decisions in the form of natural language, which can increase transparency, but ensuring these explanations are rational and grounded, rather than fabricated or “hallucinated,” demands extensive validation. Financial institutions must carefully oversee these models to balance their benefits with the requirements for accuracy, transparency, and regulatory compliance.

Generative AI is a specialized subset of artificial intelligence that creates content or predictions by analyzing and learning from patterns in the data it was trained on. While “traditional AI” is a broad term encompassing anything from statistical methods to advanced neural networks, such systems typically respond within a predefined and often limited range of possibilities. Generative AI, however, can generate much more complex and nuanced outputs, allowing it to handle sophisticated tasks, like producing personalized financial advice, dynamically assessing documents, or even simulating potential fraud scenarios in banking. This capacity for high-detail, flexible responses that are easily interpreted by humans sets it apart from earlier AI models and opens up new possibilities for transformative applications in finance.

Generative AI has the potential to transform customer service in banking by analyzing individual spending patterns and behaviors to generate tailored financial insights or product recommendations. Intelligent chatbots powered by generative AI can handle complex customer queries, enabling banks to offer round-the-clock support with personalized responses. These tools may also enhance transparency by breaking down fees and alerting customers to upcoming charges, addressing common frustrations. Although not yet fully adopted across all institutions, generative AI presents substantial potential for responsive, individualized service.

Beyond customer-facing roles, generative AI holds promise in back-office operations, such as loan underwriting and document processing. By automating the review of complex applications and dynamically assessing documents, generative AI has the capacity to reduce time-to-approval and cut down on back-and-forth delays, making financial services more efficient. It could also expand credit access by evaluating a broader range of data for unconventional applicants, thus supporting more inclusive lending practices.

For fraud detection, generative AI models can learn spending patterns from large datasets and identify anomalies, detecting suspicious activity early. This approach could help reduce unnecessary transaction blocks by distinguishing actual fraud from atypical but legitimate behavior, creating a smoother experience for customers. While regulatory and operational challenges may limit its immediate adoption, generative AI shows clear potential to redefine banking operations and elevate the customer experience.

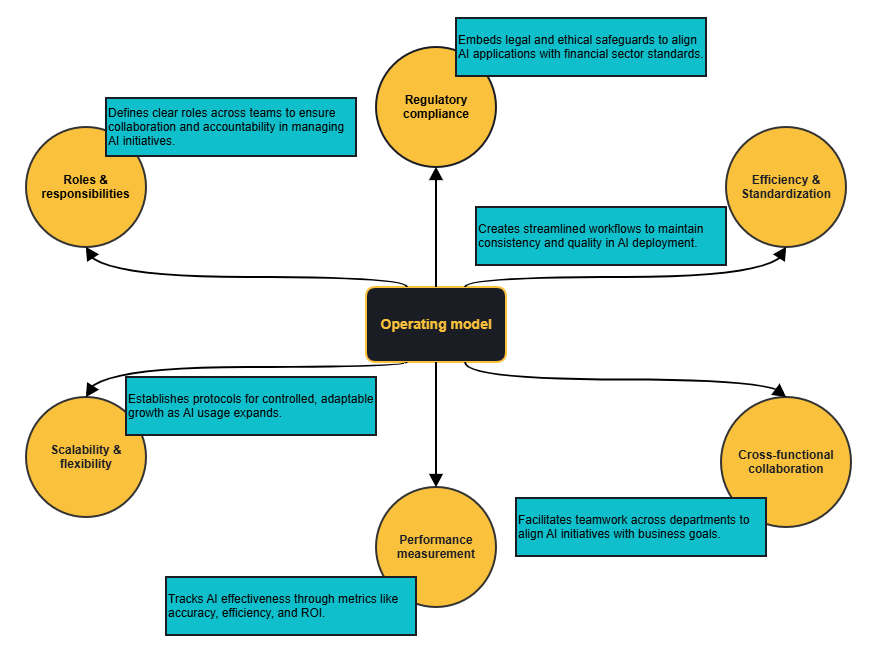

An operating model is a structured framework that outlines how an organization’s resources, processes, and responsibilities are organized to achieve specific goals. It’s essentially a blueprint for how work gets done, specifying roles, workflows, decision-making authority, and performance metrics. For financial institutions implementing generative AI, an operating model is critical to ensure that AI systems are deployed consistently, responsibly, and in alignment with the organization’s strategic goals.

In the context of generative AI in banking, an operating model helps to manage the complexity of introducing AI-driven tools and processes across various departments. It provides a clear structure for everything from data management and compliance to risk assessment and scalability. By defining clear processes and standards, an operating model enables institutions to maintain control over AI initiatives, reduce risks, and ensure that AI systems are delivering value in a sustainable and compliant way.

A well-defined operating model is important for financial institutions that want to implement generative AI in a way that aligns with organizational goals and regulatory requirements. A good framework not only supports efficiency but allows AI initiatives to be scaled responsibly, with safeguards to manage risks and uphold ethical standards. Given the complexities of integrating AI into financial operations, an operating model helps ensure that generative AI drives real, sustainable value for the organization.

Here’s what a well-structured generative AI operating model in banking and financial services should include.

Clear role definitions are vital to managing generative AI initiatives, as they enable seamless collaboration and accountability. Each role, from data scientists to compliance officers, contributes to specific areas such as data privacy, ethical AI use, and model accuracy. With clear responsibilities, stakeholders can effectively manage AI’s lifecycle, addressing challenges and ensuring best practices are followed.

Compliance is foundational to any AI application in finance, where transparency, fairness, and adherence to legal standards are critical. An operating model that embeds regulatory checks and balances allows financial institutions to manage AI-driven decisions responsibly and consistently, meeting the rigorous standards for fairness, legality, and transparency that define the sector. This structured approach to compliance builds customer and regulatory trust, ensuring that AI deployments are sustainable and aligned with evolving regulatory expectations.

As generative AI solutions expand, institutions need to scale responsibly, maintaining efficiency and adaptability. An effective operating model includes protocols for managing data access, computational resources, and model performance as AI usage grows. Flexible scalability within this framework allows institutions to adapt to regulatory or technological changes and adjust AI implementations based on real-world results, facilitating a controlled and sustainable growth process.

Monitoring performance is critical to assessing the effectiveness of generative AI in banking. An operating model with well-defined metrics—such as accuracy, efficiency, compliance, and ROI—helps institutions track performance, make informed improvements, and ensure consistent quality. Performance metrics allow organizations to assess the tangible impact of AI deployments and guide data-driven adjustments to optimize value.

An effective operating model for generative AI in banking is essential for addressing the common challenges that arise in deploying and managing AI solutions within a regulated, high-stakes environment. Implementing AI at scale involves overcoming hurdles related to data quality, model risk management, regulatory compliance, and cross-functional integration. A structured operating model enables institutions to tackle these issues by establishing standardized workflows and best practices, streamlining the AI deployment process and ensuring its long-term sustainability.

Generative AI requires a blend of expertise across areas like data science, compliance, risk, and customer service. A structured operating model facilitates cross-functional collaboration, ensuring that AI solutions are applied thoughtfully and align with business objectives. Understanding the nuances of business processes is extremely important, as misapplying AI to the wrong areas can lead to inefficiencies or even negative outcomes. A collaborative model strengthens AI’s alignment with organizational goals, maximizing its value.

Generative AI is opening new opportunities in banking, transforming everything from how banks assess risk to how they engage with customers and streamline regulatory processes. These advancements are helping banks not only optimize their operations but also adapt to a rapidly changing financial landscape. Below are some of the primary areas where generative AI is being applied in the financial sector today.

Generative AI’s ability to learn from vast datasets and relay what it finds gives banks valuable insights for more effective risk management. By analyzing historical data patterns, these models enhance predictive accuracy for areas such as fraud detection and loan approval, empowering banks to make informed, proactive decisions. Generative AI models help financial institutions better assess credit risks, forecast economic trends, and navigate regulatory compliance. This allows banks to make timely adjustments, minimizing potential risks that could impact both operational stability and customer trust.

Generative AI can improve the credit approval and loan underwriting process by assessing applicants’ creditworthiness with greater accuracy. Equipped with a degree of “common sense” reasoning (under well-defined scenarios), AI models can handle complex cases that traditional models often overlook, such as applicants with non-traditional income sources or unique financial histories. This enhanced flexibility allows for more inclusive lending practices, expanding access to credit without compromising the accuracy of evaluations, and providing underserved customer segments with more opportunities.

Regulatory compliance is a complex requirement for banks, with significant time and resources devoted to audits, risk assessments, and reporting. Generative AI can support these efforts by automating data processing, thereby reducing manual oversight and increasing accuracy in meeting compliance standards. Automated “watchdog” systems can continuously monitor for regulatory issues, flagging potential breaches early and helping banks stay ahead of compliance obligations while maintaining high standards of accuracy.

In the finance sector, security and fraud detection are critical priorities, and generative AI plays a pivotal role in strengthening these functions. By analyzing transaction patterns and identifying anomalies, AI can detect suspicious behavior more quickly and accurately than traditional systems. These models bring a heightened level of “common sense,” allowing them to better distinguish between fraudulent activity and legitimate but unusual customer behavior, such as high-value purchases or international transactions.

Beyond fraud detection, generative AI can support direct customer engagement when suspicious transactions arise, facilitating prompt outreach to clarify issues and confirm account security. This end-to-end capability enhances customer trust by making security measures both seamless and responsive. With proactive threat management, banks can safeguard their customers more effectively while ensuring compliance with stringent security standards that continue to evolve alongside digital banking trends.

Generative AI enables banks to streamline operations by automating routine yet resource-intensive tasks, such as data entry, document processing, and customer onboarding. This automation minimizes the potential for human error, speeds up processing times, and reduces labor costs, making daily operations significantly more efficient. With generative AI taking on administrative tasks, employees are freed to focus on high-value activities, such as strategy and customer engagement, which enhances both productivity and organizational focus.

In addition to handling repetitive tasks, generative AI can assist in generating reports and financial summaries, providing decision-makers with timely insights without additional manual effort. This operational support allows banks to reduce overall costs and improve service delivery, creating a more streamlined and responsive approach to back-office functions. As a result, banks can operate more efficiently and dedicate more resources to customer-facing initiatives and strategic priorities.

Generative AI empowers banks to offer a highly personalized experience, whether through tailored financial advice, intelligent chatbots, or predictive financial modeling. By analyzing customer spending patterns, generative AI can generate customized investment plans, personalized spending recommendations, and insights that reflect each customer’s unique financial situation. This personalized approach fosters stronger customer relationships and increases loyalty by providing value that goes beyond traditional banking services.

Additionally, generative AI-powered chatbots can respond to complex customer queries 24/7, offering accurate and personalized assistance around the clock. These AI-driven agents can handle a range of requests, from basic account inquiries to more detailed financial advice, creating a seamless and responsive customer experience. With these enhancements, banks can differentiate themselves in a competitive marketplace, providing a level of service that adapts to individual needs and expectations in real time.

While generative AI offers transformative benefits for financial institutions, its adoption also introduces unique challenges and risks. In finance, where sensitive data, compliance, and ethical standards are paramount, AI must be deployed with heightened caution and rigor. The following sections explore some of the most pressing challenges in leveraging generative AI in banking.

Data privacy and security are top priorities in finance, where banks handle highly sensitive customer information protected by strict regulations. Generative AI pipelines, which require access to vast datasets to learn effectively, must ensure robust data encryption, privacy safeguards, and access controls to prevent unauthorized use and data breaches. Compliance with regulatory standards, like GDPR, adds another layer of complexity, especially when dealing with cross-border data transfers and large data volumes. Financial institutions must strike a balance between AI’s data needs and the responsibility to safeguard customer information.

Additionally, security protocols must be proactive and regularly updated to counteract evolving threats, such as cyberattacks targeting AI models and the data they handle. Continuous monitoring, advanced encryption, and secure access controls are essential to protect sensitive data while maintaining AI’s operational effectiveness.

Generative AI models depend heavily on the data they are trained on, and if this data contains biases, the AI can inadvertently reinforce unfair outcomes in financial decisions. This is particularly concerning in applications like credit scoring, lending, and investment recommendations, where biased models could disproportionately impact certain demographics. Financial institutions have an ethical and legal responsibility to address these biases to ensure that AI applications treat all customers fairly.

With generative models, which typically rely on large datasets, achieving a balanced data distribution across all demographic groups can be particularly challenging. The scale of data required for these models makes it difficult to guarantee equal representation, increasing the risk of unintentional bias. Addressing this requires rigorous data selection and bias-mitigation techniques, such as re-sampling or re-weighting datasets. Additionally, tools like Weights & Biases can support transparency in model performance and bias analysis, helping banks identify and address areas where AI may unintentionally discriminate. Regular audits and continuous evaluation are essential to uphold ethical standards and prevent discriminatory practices, ensuring that AI-driven financial decisions remain fair and equitable.
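
As a rough illustration of the re-weighting idea mentioned above, here is a minimal sketch in plain Python. It assumes each training record carries a single group label (the `groups` list and its "A"/"B" values are made up for this example) and assigns inverse-frequency weights so that every group contributes the same total weight. Real bias mitigation involves far more than this, so treat it as a starting point rather than a complete method.

```python
from collections import Counter

def group_balance_weights(groups):
    """Return one weight per record so every group carries equal total weight."""
    counts = Counter(groups)
    n_groups = len(counts)
    total = len(groups)
    # Inverse-frequency weighting: records from rarer groups get larger weights.
    # The weights still sum to the number of records.
    return [total / (n_groups * counts[g]) for g in groups]

# Hypothetical, heavily imbalanced sample: three records from A, one from B.
groups = ["A", "A", "A", "B"]
weights = group_balance_weights(groups)
# Each A record gets 4 / (2 * 3), about 0.67; the single B record gets 4 / (2 * 1) = 2.0.
```

Weights like these can typically be passed to a training routine as per-sample weights, so that an under-represented group is not drowned out by the majority.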

Many financial institutions rely on legacy systems that weren’t designed for the complex data requirements and computational power that generative AI demands. Integrating AI with these older systems can lead to significant technical challenges, including compatibility issues, security vulnerabilities, and operational disruptions. This integration often requires substantial resources, infrastructure upgrades, and a clear migration strategy to avoid costly errors and ensure smooth transitions.

To address these challenges, banks need careful planning and, in most cases, incremental upgrades to infrastructure that allow for safe and efficient AI implementation. Compatibility testing and phased deployment can also help minimize disruption, allowing AI applications to work effectively alongside legacy systems without compromising performance.

Generative AI models, particularly large language models (LLMs), are often referred to as “black boxes” because the logic behind their decisions is difficult to interpret and explain. This lack of transparency poses a unique challenge for banks, where explainability is critical to regulatory compliance and customer trust. Generative AI models also introduce a level of unpredictability, or *stochasticity*, which refers to the random variability in outputs. Even when the same input is used, the model can produce different outputs, and small changes in the input or environment can shift results further, making consistent decision tracking a challenge.

With LLMs, this stochastic nature is partially controlled by a setting called temperature, which influences how deterministic or random the model’s responses are. When temperature is set to 0, the model favors its most likely output at each step, producing largely consistent outputs for the same input. At any temperature above 0, the model introduces variability, and higher values allow more creative or diverse outputs. This randomness can be useful in some contexts but becomes problematic in financial applications, where precise, explainable results are essential.
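
To make the effect of temperature concrete, the sketch below samples from a small set of made-up scores (`logits`) the way a language model picks its next token. It is an idealized toy and not any vendor's implementation: the scores, the function name, and the seed are assumptions chosen for illustration.

```python
import math
import random

def sample_with_temperature(logits, temperature, rng):
    """Pick an index from raw scores (logits) at the given temperature."""
    if temperature == 0:
        # Greedy choice: always take the highest-scoring option.
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    # Sample in proportion to the softmax of the temperature-scaled scores.
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

logits = [2.0, 1.5, 0.5]  # hypothetical scores for three candidate tokens
rng = random.Random(0)    # fixed seed so the toy run is repeatable
greedy = [sample_with_temperature(logits, 0, rng) for _ in range(20)]
varied = [sample_with_temperature(logits, 1.0, rng) for _ in range(20)]
```

In this toy, `greedy` contains only index 0, while `varied` is drawn at random from all three candidates. Hosted models may still show small run-to-run differences at temperature 0, so logging inputs and outputs remains worthwhile when decisions need to be traced.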

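Model drift occurs when an AI model’s performance declines over time because the data it encounters no longer matches the patterns it was trained on. This drift can happen in two ways: concept drift, where the relationship between the inputs and the outcome being predicted changes, and data drift, where the distribution of the input data itself shifts.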

In finance, where accurate predictions are essential, model drift can lead to suboptimal decisions. For example, a model that assesses credit risk based on past economic conditions may struggle to adapt to a sudden downturn, potentially impacting risk assessments and lending decisions. This can have broad implications, affecting everything from risk management strategies to customer satisfaction.
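
One common way to watch for data drift is to compare the distribution of an input feature at training time against recent production data. The sketch below computes a Population Stability Index (PSI) in plain Python. The bin edges and sample values are invented for illustration, and any alerting threshold would need to be set by the institution itself.

```python
import math

def population_stability_index(expected, actual, edges):
    """PSI between two samples, using the same bin edges for both."""
    def bin_shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            idx = sum(v >= e for e in edges)  # number of edges at or below v
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e = bin_shares(expected)
    a = bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))

# Hypothetical feature values (for example, a normalized utilization ratio).
training = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
recent = [0.6, 0.7, 0.8, 0.9, 0.9, 0.8, 0.7, 0.95]
edges = [0.25, 0.5, 0.75]

psi_same = population_stability_index(training, training, edges)
psi_shift = population_stability_index(training, recent, edges)
```

Here `psi_same` is zero because the two samples are identical, while `psi_shift` is clearly positive because the recent values cluster in the upper bins. Note that PSI only captures shifts in the inputs. Detecting concept drift generally requires comparing predictions against realized outcomes.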

In the highly regulated banking sector, generative AI applications must strictly adhere to financial regulations to prevent misuse and uphold ethical standards. Compliance involves building safeguards into AI applications, from data privacy controls to bias mitigation, and continuously monitoring for adherence to legal and ethical requirements. The consequences of non-compliance are severe, ranging from regulatory fines to reputational damage and loss of customer trust.

Ethical considerations also play a significant role in AI deployment. Financial institutions must evaluate how AI impacts customers and ensure that its use aligns with both corporate values and legal standards. By implementing robust compliance monitoring, banks can detect and address potential issues proactively, building trust with both regulators and customers.

Scaling generative AI in banking requires a thoughtful approach that balances infrastructure investments, governance, and risk management to handle the complexity and sensitivity of financial data. As banks expand their AI initiatives, they must consider the resources and frameworks necessary to support larger, more integrated AI systems. Effective scaling involves not only the technical capability to manage high volumes of data and computations but also the structures that ensure AI systems operate safely, compliantly, and ethically.

To scale generative AI effectively, banks need to invest in optimized infrastructure, including cloud-based platforms and high-capacity data processing systems, to meet the intensive computational needs. Cloud infrastructure provides flexibility, allowing resources to scale in sync with the growth of AI applications. However, not all cloud providers are equal in terms of cost, performance, or flexibility, and selecting the right provider is essential. Allocating resources to evaluate cloud providers based on pricing, service quality, and support can lead to cost savings and more efficient operations in the long term.

Additionally, reducing vendor lock-in during the infrastructure design phase can offer significant benefits down the line. Designing infrastructure to be flexible—capable of operating across multiple providers or allowing easy migration—gives institutions the freedom to adapt as market conditions or service offerings change. This approach helps avoid reliance on a single vendor and ensures that scaling remains cost-effective and adaptable as the bank’s AI capabilities grow.

Weights & Biases can support this scaling by providing a unified platform for tracking model training, evaluating performance, and documenting experiments, allowing teams to manage scaled AI applications cohesively. This centralized system fosters efficient collaboration across departments and helps maintain oversight as AI usage grows, ensuring a streamlined and effective scaling process.

To scale generative AI responsibly, banks must implement effective risk management practices that address the unique challenges posed by AI. A core component of this is understanding the fundamental capabilities and limitations of the underlying technology. A deep comprehension of generative AI’s strengths and weaknesses allows institutions to make informed decisions about when and how to use it, ensuring that AI is deployed in ways that align with strategic goals and operational realities. This foundational knowledge helps avoid inappropriate applications that could lead to biased outputs, inefficiencies, or compliance risks.

Having the right personnel in place, with expertise spanning technological, regulatory, and ethical risk areas, further strengthens risk management. A team that combines skills in AI technology, regulatory compliance, and ethical considerations provides comprehensive oversight, allowing banks to anticipate and address potential risks across these critical domains. This multidisciplinary approach helps ensure that AI is deployed thoughtfully, balancing innovation with safeguards that protect both the institution and its customers.

As AI applications expand, implementing monitoring systems to detect issues like model drift, data biases, and security vulnerabilities is essential. Early detection allows banks to intervene promptly, minimizing risks to customer trust and regulatory compliance. Tools like W&B Weave can help by enabling continuous tracking of model inputs, outputs, and performance metrics, providing a centralized platform for analyzing results and identifying anomalies. This approach ensures that potential issues are quickly addressed, helping maintain the integrity and reliability of AI systems in banking.

A thorough risk management framework considers the ethical, operational, and regulatory implications of scaled AI, establishing a foundation for safe, compliant growth. Regular oversight and continuous learning ensure that AI systems remain aligned with industry standards, operate ethically, and meet institutional priorities as they scale.

Generative AI is positioned to play an increasingly transformative role in the banking sector, reshaping how institutions address common customer pain points, streamline operations, and make financial services more accessible.

As banks continue to adopt AI technologies, the potential for meaningful improvements in transparency, customer service, and inclusivity becomes more tangible. While there are numerous possibilities, several core areas stand out as key opportunities where generative AI can drive impactful change over the medium term.

For complex processes like loan applications, lengthy approval times can hinder customers’ ability to make timely financial decisions. Small business owners, for instance, may struggle to secure necessary capital if approval processes take weeks. Generative AI can streamline these processes by dynamically assessing submitted documents, identifying missing or incomplete information, and promptly raising any issues, reducing delays that would typically require manual intervention. This proactive approach prevents unnecessary back-and-forth and speeds up approvals, allowing customers to access funds more quickly to support their business or personal financial needs.

When dealing with urgent issues like a stolen debit card, customers need quick, reliable support. Traditional customer service systems, however, often result in long wait times, multiple transfers, and inconsistent information. AI-driven customer service agents can potentially handle these cases end-to-end, from triaging and routing high-priority issues to resolving them or escalating when needed. Generative AI can ensure that urgent cases are fast-tracked, delivering timely and accurate assistance, reducing wait times, and creating a more satisfying support experience for customers.

Traditional credit evaluation models tend to overlook non-traditional income earners, such as self-employed individuals or gig workers, who may have stable but unconventional income streams. Generative AI has the potential to make credit evaluations more inclusive by analyzing a wider range of data points, including cash flow consistency, business history, and contract reliability, to form a more comprehensive view of creditworthiness. Additionally, AI-driven systems can recommend further investigation strategies, like specific follow-up questions or additional documentation requests, allowing for a more tailored assessment. This approach could help more individuals and small business owners qualify for financial products like mortgages and loans, expanding access to credit for diverse customer groups.

Current fraud detection systems can sometimes be overly cautious, frequently blocking legitimate transactions that don’t fit a customer’s typical spending pattern. This is particularly disruptive for customers who are traveling or making unusual purchases. Generative AI can improve fraud detection by learning and adapting to each customer’s behavior, distinguishing actual fraud from atypical but legitimate activity. This personalized approach reduces unnecessary transaction blocks, allowing for a smoother experience without compromising security.
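
The idea of judging a transaction against each customer's own behavior can be illustrated with a deliberately simple statistical stand-in. The sketch below uses a per-customer z-score rather than a generative model, and the spending histories and cutoff are invented for the example. It shows only why a personalized baseline reduces false blocks, not how a production fraud system works.

```python
from statistics import mean, stdev

def is_unusual(history, amount, z_cutoff=3.0):
    """Flag an amount that sits far outside this customer's own history."""
    if len(history) < 2:
        return False  # not enough history to judge
    spread = stdev(history)
    if spread == 0:
        return amount != history[0]
    return abs(amount - mean(history)) / spread > z_cutoff

# Hypothetical spending histories for two customers.
frequent_traveller = [120.0, 950.0, 80.0, 1100.0, 60.0, 875.0]
steady_spender = [40.0, 55.0, 48.0, 52.0, 45.0, 50.0]

flag_traveller = is_unusual(frequent_traveller, 900.0)
flag_steady = is_unusual(steady_spender, 900.0)
```

The same 900.0 purchase is flagged for the steady spender but not for the frequent traveller, whose history already includes large, irregular amounts.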

These core opportunities illustrate how generative AI can help banks address specific challenges, fostering more transparent, inclusive, and efficient banking practices. By focusing on these areas, banks can leverage AI to enhance customer relationships, expand access to financial services, and provide a more personalized and responsive experience in the years ahead.

Customers are often frustrated by hidden fees or complex terms, such as unexpected charges for services like paper statements, foreign transactions, or account maintenance. This lack of transparency can damage trust, especially when key details are buried in fine print. Generative AI can address this by providing clear, easy-to-understand breakdowns of fees and terms, using straightforward language that helps customers make informed decisions. Additionally, AI-driven reminders about upcoming charges, particularly for new customers, could reduce unpleasant surprises and promote a more transparent relationship between banks and their clients.

Another (more technical) potential direction for generative AI in banking involves hybrid architectures that blend the capabilities of large language models with traditional AI methods like decision trees or rule-based systems. This approach combines the predictive power of advanced models with the interpretability of simpler algorithms. For example, while an LLM can analyze complex patterns and generate nuanced predictions, a decision tree or rule-based layer can help break down these outputs into transparent, step-by-step explanations.

By incorporating explainable models within a generative AI architecture, banks can leverage the strengths of LLMs while maintaining the clarity and accountability needed in regulated environments. This setup allows banks to understand and justify AI-driven decisions to regulators and customers alike, providing a pathway to greater transparency. Hybrid architectures could bridge the gap between advanced technology and operational clarity, offering a balanced approach for responsibly scaling generative AI in banking.
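
A minimal sketch of such a hybrid setup might look like the following. The model score is a stub standing in for whatever an LLM-based component would produce, and the rules, field names, and thresholds are hypothetical. The point is only that the final decision carries a list of human-readable reasons that can be shown to a regulator or customer.

```python
from dataclasses import dataclass, field

@dataclass
class Decision:
    approved: bool
    reasons: list = field(default_factory=list)

def stub_model_score(application):
    """Stand-in for an LLM-derived score in [0, 1]; purely hypothetical."""
    return application["model_score"]

# Hypothetical rules: (description, check that must pass for approval).
RULES = [
    ("debt-to-income ratio at or below 0.40", lambda a: a["debt_to_income"] <= 0.40),
    ("at least 12 months of income history", lambda a: a["income_months"] >= 12),
]

def decide(application, score_threshold=0.7):
    """Combine the model score with explicit rules and record every outcome."""
    score = stub_model_score(application)
    approved = score >= score_threshold
    status = "PASS" if approved else "FAIL"
    reasons = [f"{status}: model score {score:.2f} vs threshold {score_threshold:.2f}"]
    for description, check in RULES:
        ok = check(application)
        reasons.append(("PASS: " if ok else "FAIL: ") + description)
        approved = approved and ok
    return Decision(approved, reasons)

application = {"model_score": 0.82, "debt_to_income": 0.55, "income_months": 24}
decision = decide(application)
```

In this example the model score alone would pass, but the debt-to-income rule fails, so the application is declined and `decision.reasons` states exactly which check was responsible.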

As generative AI reshapes banking, its full potential lies not just in the technical advancements it offers but in the broader transformations it enables. Embracing this technology with rigor and responsibility can drive significant progress in accessibility, transparency, and customer engagement. However, as banks adopt generative AI, they must remain mindful of the ethical, regulatory, and operational challenges unique to the sector. Balancing innovation with caution will ensure that generative AI advances in ways that truly benefit customers and society as a whole.

Looking forward, financial institutions have the opportunity to pioneer hybrid and explainable AI architectures that blend advanced prediction capabilities with greater transparency. These models could set new standards for accountability, allowing banks to deliver both cutting-edge service and trustworthiness in equal measure. Generative AI’s future in banking will be defined not only by its capacity to streamline and innovate but by its role in fostering a more inclusive, fair, and resilient financial landscape. As this journey unfolds, institutions that build responsibly will be well-positioned to lead and redefine the banking experience for generations to come.