AI agents represent a paradigm shift in finance and banking, evolving beyond static predictive models to autonomous entities. These systems can perceive environments, reason through complex scenarios, and execute decisions with bounded autonomy.

However, in a regulated sector, this agency requires a new standard of oversight. By using W&B Weave as a centralized trace-and-governance layer, institutions can ensure that every autonomous action is safe, observable, and backed by explicit approval controls for high-stakes outcomes.

AI agents combine an LLM with tools, memory, and policy-controlled actions to execute workflows and not just generate text. In banking, the near-term ROI is highest for read- and audit-heavy processes like KYC intake, fraud triage, compliance drafting, and research summarization.

The core risks are unsafe actions, data leakage, drift, and audit gaps. Success depends on a robust governance stack: tool allowlists, RBAC, and sandboxing, underpinned by W&B Weave to provide the immutable execution traces required for regulatory compliance.

The pragmatic path is to start with read-only or constrained-write pilots, define measurable KPIs (cycle time, false positives, override rate), and expand autonomy only after observability and incident response are mature. By maintaining a centralized record of all agent reasoning, institutions can transform “black box” AI into a transparent, auditable digital workforce.

We are currently witnessing the next major shift in how AI is applied in production systems: from models that predict, to systems that generate, to systems that can plan and act within defined controls.

Most teams in banking and finance progress through a few clear stages when adopting AI agents into their workflows:

The transition gate: Moving from Stage 1 (Assistant) to Stage 2 (Constrained Agent) and beyond requires a shift from simple logging to full execution traceability. Using W&B Weave, teams can capture every pilot run as a “system of record,” providing the evidence that risk committees need to approve higher levels of autonomy.

From advisor to actor: In the financial sector, this shift is profound. We are moving away from AI that acts merely as an “advisor” (e.g., a chatbot suggesting a portfolio change) toward AI that functions as an “actor”—but crucially, with bounded autonomy, permissions, and oversight.

| Traditional AI in finance | Agentic AI in finance |
|---|---|
| Reactive, task-specific (e.g., fraud scoring) | Proactive, goal-directed (e.g., autonomous portfolio rebalancing within policy) |
| Human-dependent workflows | Autonomous loops with self-correction and approvals |
| Static data analysis | Dynamic interaction with markets/tools |

Unlike traditional models focused on narrow pattern recognition, agentic AI systems embody “agency.” They don’t just process data; they pursue goals. By integrating LLMs with external tools, memory, and APIs, these agents can autonomously execute plans, respond to market volatility, and optimize resources in real time within explicit constraints.

For those of us building these systems, the leap from passive generation to autonomous action introduces new challenges. The focus is shifting from simple model accuracy to complex reliability, governance, and safety, with auditability and control as first-class requirements.

Welcome to the world of agentic finance.

Let’s first clear up a common misconception: Agentic AI is not just a rebranded large language model. While the LLM acts as the reasoning engine (essentially the “brain”), an AI agent is the entire body around it, capable of interacting with the world.

Think of generative AI as a system that can write a report for you, whereas an agentic system is like a digital employee that can research the data, write the report, and then email it to the compliance officer. This creates a shift from “help-me” chatbots to “do-it-for-me” autonomous systems.

At a functional level, a financial AI agent operates on a continuous cognitive loop, mimicking the workflow of a human analyst. It generally follows a four-step cycle:

Perception (the eyes and ears): The agent doesn’t just wait for a chat prompt. It actively ingests data from its environment, such as real-time market feeds, transaction logs, internal documents, and API streams.

Reasoning & planning (the brain): Using the LLM, the agent evaluates the situation through structured planning, weighs options, and decomposes a high-level goal (e.g., “minimize portfolio risk”) into a logical, step-by-step plan.

Action (the hands): This is where the magic happens. The agent interfaces with external tools – executing trades via APIs, querying a vector database to check compliance rules, or updating a KYC file in the core banking system – only where policy and permissions allow.

Learning & adaptation: Finally, the agent observes the results of its actions. Did the trade go through? Did the risk parameter adjust correctly? It adapts its strategy in real-time based on the outcome.
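The "only where policy and permissions allow" constraint on the action step can be made concrete with a role-based tool allowlist. The following is a minimal sketch, not a production design: the role names, tool names, and the `call_tool` helper are all hypothetical and exist only for illustration.

```python
# Hypothetical roles and tool names; a real system would load these from policy config.
ROLE_TOOLS = {
    "kyc_reader": {"read_kyc_file", "query_compliance_rules"},
    "kyc_editor": {"read_kyc_file", "query_compliance_rules", "update_kyc_file"},
}


class ToolNotAllowed(PermissionError):
    """Raised when a role tries to call a tool outside its allowlist."""


def read_kyc_file(customer_id: str) -> dict:
    # Stand-in for a read against the core banking system
    return {"customer_id": customer_id, "status": "verified"}


REGISTRY = {"read_kyc_file": read_kyc_file}


def call_tool(role: str, tool: str, registry: dict, **kwargs):
    """Check the allowlist first, then dispatch to the registered tool."""
    if tool not in ROLE_TOOLS.get(role, set()):
        raise ToolNotAllowed(f"{role!r} may not call {tool!r}")
    return registry[tool](**kwargs)


record = call_tool("kyc_reader", "read_kyc_file", REGISTRY, customer_id="C-1")
```

Checking the allowlist before the registry lookup means a blocked call fails the same way whether or not the tool exists, which keeps denied attempts easy to log and audit.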

Industry leaders like Deloitte describe these as systems that “independently reason and execute complex tasks,” while Salesforce emphasizes their ability to personalize services and handle compliance autonomously.

Architecturally, these “agents of change” often rely on frameworks such as ReAct (Reason + Act) or operate within multi-agent systems in which specialized agents collaborate. Picture a fraud detection agent automatically flagging a transaction and asking a separate compliance agent to verify the user’s history.

The bottom line: Standard generative AI provides outputs; Agentic AI provides outcomes. It decides and acts, though with human oversight for high-stakes decisions.

How do you safely give an AI access to real-time market data, internal calculators, and core banking systems?

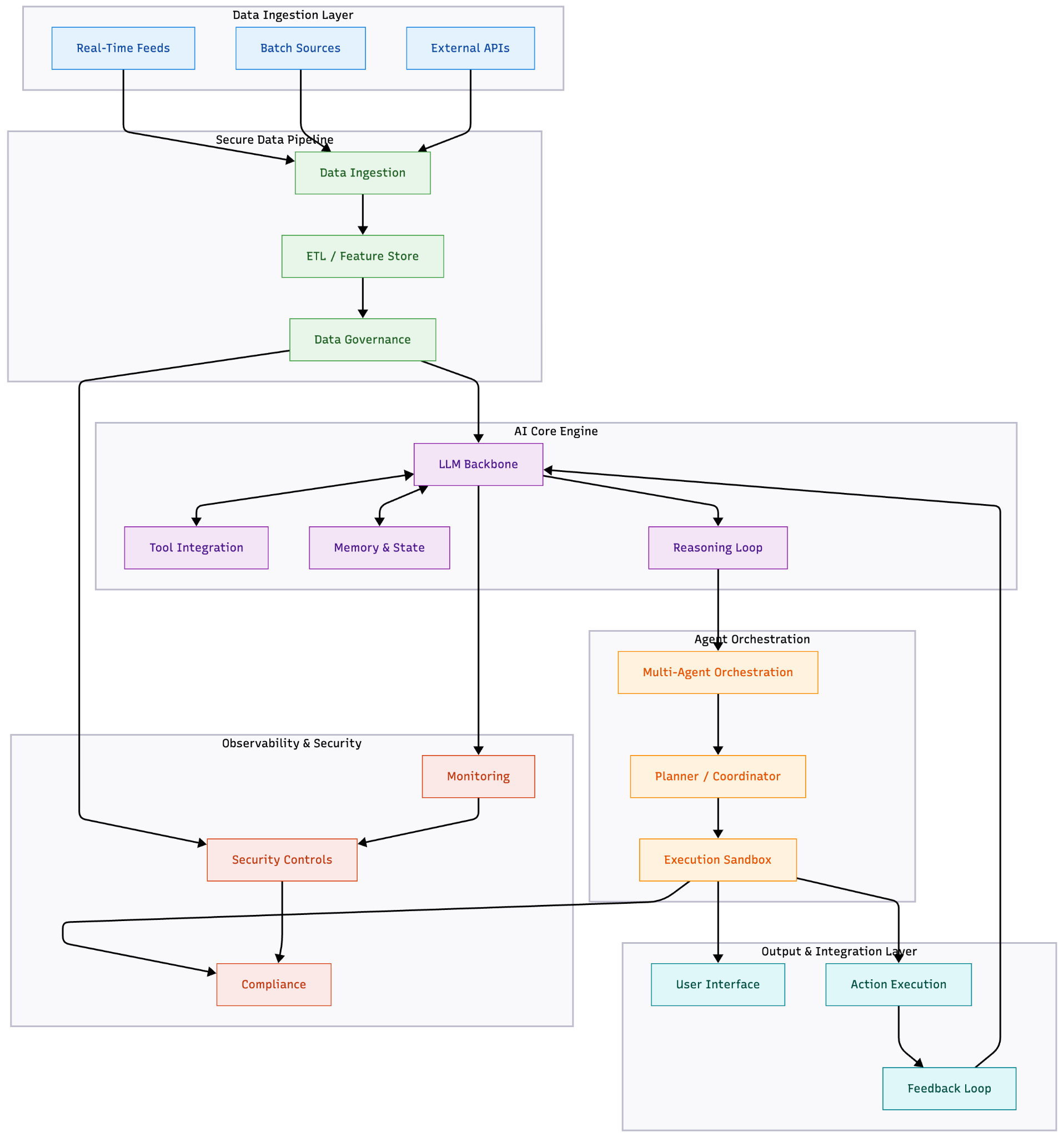

The answer lies in a carefully designed architecture. This section breaks down the end-to-end data and logic flow, from secure ingestion to a sandboxed, human-in-the-loop execution layer.

A high-level architectural overview of an AI-driven financial agentic system, illustrating the data flow and core components from ingestion to compliant output.

This architecture outlines a robust, end-to-end system for deploying autonomous AI agents within the financial sector. It is designed to be secure, scalable, and auditable, moving beyond simple chatbots to agents capable of complex reasoning and action.

Let’s break down the flow, component by component.

This is the foundation that feeds the agent. It pulls data from all necessary sources. AI agents need real-time and historical financial data to “observe” the environment. Finance data is high-volume, time-sensitive, and regulated.

Owner: Data platform.

KPIs: data freshness, schema drift rate, and ingestion latency.

This layer provides data sanitation, enrichment, and governance, ensuring all financial inputs meet security and compliance requirements. You cannot process raw financial data without this mandatory scrubbing and validation step.

Compliance lives here. All data is encrypted and anonymized upfront to avoid PII leakage and meet audit and regulatory requirements.
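As a rough illustration of scrubbing PII before data reaches the agent, the sketch below replaces emails and long digit runs with salted tokens. The regex patterns, the salt, and the `<tok:...>` format are assumptions made for this example; real deployments need vetted detectors and managed secrets.

```python
import hashlib
import re

# Hypothetical patterns for illustration only: an email, or a 10-16 digit run.
PII_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+|\b\d{10,16}\b")


def pseudonymize(value: str, salt: str) -> str:
    """Replace a sensitive value with a stable, salted token."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return f"<tok:{digest[:12]}>"


def scrub(text: str, salt: str = "demo-salt") -> str:
    """Mask emails and account-like numbers in a single pass."""
    return PII_RE.sub(lambda m: pseudonymize(m.group(), salt), text)


raw = "Customer jane.doe@example.com paid from account 1234567890123."
clean = scrub(raw)
```

Because the token is derived from the value and a salt, the same customer maps to the same token across records, so downstream joins still work without exposing the raw identifier.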

Owner: Security + data governance.

KPIs: PII leakage incidents, access review pass rate, audit log completeness.

This is the decision-making core. At its center sits the LLM backbone – typically a fine-tuned GPT, Llama, or Claude model optimized for financial data. However, an LLM on its own should not be trusted for precise computation without tool-backed verification. To fix this, we wrap the model with three critical capabilities:

Memory: This layer provides the agent with both long-term memory and short-term conversational state.

Tool integration: The LLM is enhanced with external tools—so it doesn’t “guess.” It calls calculators, risk engines, SQL databases, and financial APIs to execute precise operations (e.g., Black-Scholes pricing via SymPy or portfolio optimization via QuantLib).

Reasoning loop: ReAct pattern = Observe → Think → Act → Repeat. For example, a fraud agent observes a transaction, reasons “high amount from new device,” acts by blocking and alerting, and then logs the rationale.
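To make the reasoning loop concrete, here is a minimal sketch of the fraud example. The `think` function is a rule-based stand-in for the LLM, and the 5,000 threshold, tool name, and action names are hypothetical; the point is the Observe → Think → Act → Repeat structure and the rationale logged at each turn.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    amount: float
    device_known: bool


def think(obs: dict) -> tuple:
    """Stand-in for the LLM: returns (rationale, next_action)."""
    if "device_known" not in obs:
        return "need device history before deciding", "lookup_device"
    if obs["amount"] > 5_000 and not obs["device_known"]:
        return "high amount from new device", "block_and_alert"
    return "no risk signals found", "approve"


# Hypothetical tool registry; each tool returns new observations.
TOOLS = {"lookup_device": lambda txn: {"device_known": txn.device_known}}
TERMINAL = {"approve", "block_and_alert"}


def react(txn: Transaction, max_turns: int = 5):
    obs = {"amount": txn.amount}
    trace = []
    for _ in range(max_turns):
        rationale, action = think(obs)
        trace.append({"observation": dict(obs), "thought": rationale, "action": action})
        if action in TERMINAL:
            return action, trace
        obs.update(TOOLS[action](txn))  # act, then observe the result
    return "escalate_to_human", trace  # turn budget exhausted


decision, trace = react(Transaction(amount=9_000.0, device_known=False))
```

The `max_turns` cap and the `escalate_to_human` fallback are one way to keep the loop bounded; an agent that cannot reach a terminal action hands off rather than spinning.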

Owner: ML platform.

KPIs: task success rate, tool-verification pass rate, and evaluation regression rate.

In finance, a single agent isn’t enough—you need a coordinated team. Multi-agent setups let each agent focus on what it does best while a central controller keeps everything organized.
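A central controller coordinating specialized agents might look roughly like the sketch below. The two agents are simple rules standing in for real models, and the thresholds, field names, and decision labels are hypothetical.

```python
from typing import Callable, Dict

Agent = Callable[[dict], dict]


def fraud_agent(case: dict) -> dict:
    # Hypothetical rule standing in for a fraud model
    return {"flagged": case["amount"] > 10_000}


def compliance_agent(case: dict) -> dict:
    # Hypothetical check of the user's history
    return {"history_ok": case["prior_alerts"] == 0}


class Controller:
    """Routes a case between agents and logs every hand-off."""

    def __init__(self, agents: Dict[str, Agent]):
        self.agents = agents
        self.log = []

    def _call(self, name: str, case: dict) -> dict:
        result = self.agents[name](case)
        self.log.append((name, result))
        return result

    def handle(self, case: dict) -> str:
        if not self._call("fraud", case)["flagged"]:
            return "release"
        if self._call("compliance", case)["history_ok"]:
            return "release_with_note"
        return "hold_for_human_review"


controller = Controller({"fraud": fraud_agent, "compliance": compliance_agent})
outcome = controller.handle({"amount": 25_000, "prior_alerts": 2})
```

Keeping the routing logic in the controller, rather than letting agents call each other directly, gives one place to enforce policy and one log to audit.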

Owner: Application/platform engineering.

KPIs: workflow latency, failure retry rate, cost per run, sandbox block rate.

This is where the rubber meets the road. Once a plan is finalized and sandboxed, it moves into the Output Layer for execution and user interaction.

Owner: Product engineering + integration teams.

KPIs: action success rate, rollback frequency, human override rate.

This layer ensures the system remains auditable, resilient, compliant, and secure, which is a must in finance, where a single breach can erase trust.

Observability (the control plane for agentic systems): In banking, you need to know what an agent did, why it did it, and what data it used. The minimum observable unit isn’t a single model output; it’s the full execution trace of an agent run:

This is where tools like W&B Weave become valuable. Weave logs agent runs end-to-end, supports evaluation and regression testing across versions, and makes it easy to compare behavior before and after a change.
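To illustrate what a tamper-evident execution trace involves, the sketch below chains each step to the previous one with a hash. This is a standard-library illustration of the idea only; it is not the Weave API, and the step kinds and payload fields are hypothetical.

```python
import hashlib
import json

FIELDS = ("index", "kind", "payload", "prev_hash")


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_step(trace: list, kind: str, payload: dict) -> dict:
    """Append a step whose hash covers its content and the previous step's hash."""
    prev_hash = trace[-1]["hash"] if trace else "genesis"
    body = {"index": len(trace), "kind": kind, "payload": payload, "prev_hash": prev_hash}
    step = {**body, "hash": _digest(body)}
    trace.append(step)
    return step


def verify(trace: list) -> bool:
    """Recompute every hash; an edited, removed, or reordered step breaks the chain."""
    prev_hash = "genesis"
    for i, step in enumerate(trace):
        body = {k: step[k] for k in FIELDS}
        if step["index"] != i or step["prev_hash"] != prev_hash or step["hash"] != _digest(body):
            return False
        prev_hash = step["hash"]
    return True


trace = []
append_step(trace, "prompt", {"text": "Review transaction 42"})
append_step(trace, "tool_call", {"tool": "risk_score", "args": {"txn_id": 42}})
append_step(trace, "decision", {"action": "escalate", "rationale": "score above threshold"})
intact = verify(trace)
```

Each record captures what the agent saw, which tool it called, and why it decided, which are the three questions an auditor is likely to ask about any run.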

Owner: SRE/SecOps + risk.

KPIs: MTTD/MTTR, audit completeness, policy violation rate, evaluation regression rate, and % of production runs with complete trace coverage.

AI agents are rapidly reshaping the finance and banking sector by enhancing autonomy, intelligence, and adaptability. Instead of relying only on rigid rule-based systems, these agents can understand real-time data, make sense of complex financial situations, interact with tools and APIs, and continuously learn from feedback.

Here are some of the key areas where AI agents are already making a meaningful impact.

1. Fraud detection & prevention

Modern financial systems handle massive streams of transactions every second. AI agents help make sense of this chaos by scanning activity in real time, spotting anomalies, and taking action before fraud escalates.

AI agents analyze transactions, user behavior, device details, and merchant history. Instead of relying on fixed rules, they generate dynamic risk scores using LLM-based reasoning and anomaly detection models.

When something looks suspicious, the agent can instantly block a transaction, trigger an extra KYC step, or hand the case to a human analyst. With a built-in memory component, these agents also learn new fraud patterns over time.

For example, if a user suddenly initiates rapid micro-transactions, the agent may review prior behavior, query internal risk APIs, and decide whether to flag or block the activity, while automatically documenting the rationale.
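The rapid micro-transaction signal in this example can be approximated with a sliding window, sketched below. The amount ceiling, window length, and count threshold are hypothetical values chosen for illustration, and in practice this would be one input among many rather than a decision on its own.

```python
from collections import deque


def rapid_micro_txns(events, max_amount=5.0, window_s=60, threshold=5) -> bool:
    """Return True if `threshold` or more small payments fall within `window_s` seconds.

    events: iterable of (timestamp_seconds, amount), sorted by timestamp.
    """
    recent = deque()
    for ts, amount in events:
        if amount > max_amount:
            continue  # only small payments count toward the burst
        recent.append(ts)
        while ts - recent[0] > window_s:
            recent.popleft()  # drop payments that left the window
        if len(recent) >= threshold:
            return True
    return False


burst = [(t, 1.99) for t in range(0, 50, 10)]      # five small payments in 40 seconds
normal = [(0, 1.99), (300, 2.50), (900, 120.00)]   # spread out, one large payment
burst_flagged = rapid_micro_txns(burst)
normal_flagged = rapid_micro_txns(normal)
```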

Real-world examples:

For a long time, truly personalized financial planning was accessible only to high-net-worth individuals. AI agents are changing that. They act like always-available financial co-pilots, giving everyday users the kind of tailored guidance that once required a dedicated advisor.

These agents learn from a user’s spending patterns, financial goals, and risk tolerance, and not just market data. That lets them move from passive alerts to proactive, context-aware guidance.

Proactive goal alignment: The agent monitors cash flow and assesses whether the user is drifting from key goals. If it spots something like overspending, it suggests a concrete action, not just a warning. “You’ve gone 20% over your dining budget. To stay on track, consider moving $150 from your contingency fund to savings on Friday. Approve?”

Contextual investment nudges: By analyzing the user’s portfolio alongside real-time economic signals, the agent can recommend small rebalances or adjustments while remaining within the user’s defined Investment Policy Statement (IPS).

Real-world examples:

These examples show how AI agents turn defense into offense, saving billions in potential losses.

AI agents streamline underwriting by automating the full workflow from collecting documents, extracting features, running risk models, and checking compliance, to generating an explainable decision package. This enables near-instant lending decisions while keeping every step auditable.

Traditional underwriting is rigid and rule-based. Agentic systems make it adaptive, transparent, and scalable, especially when multiple micro-decisions are involved (income check → bank flow validation → scoring → pricing → review routing).
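The chain of micro-decisions can be sketched as a small pipeline that records every step for audit. All thresholds, scores, rates, and field names below are made up for illustration and do not reflect any real credit policy.

```python
def underwrite(app: dict) -> dict:
    """Run the micro-decisions in order and keep an audit entry for each."""
    audit = []

    def record(step: str, outcome) -> None:
        audit.append({"step": step, "outcome": outcome})

    # Hypothetical rule: income must cover repayments three times over
    income_ok = app["annual_income"] >= 3 * app["annual_repayment"]
    record("income_check", income_ok)

    # Hypothetical rule: observed inflows must support at least 90% of stated income
    flows_ok = app["avg_monthly_inflow"] * 12 >= 0.9 * app["annual_income"]
    record("bank_flow_validation", flows_ok)

    score = 600 + (50 if income_ok else -50) + (50 if flows_ok else -50)
    record("scoring", score)

    rate = 0.07 if score >= 700 else 0.11
    record("pricing", rate)

    route = "auto_approve_queue" if score >= 700 else "human_review"
    record("review_routing", route)

    return {"score": score, "rate": rate, "route": route, "audit": audit}


decision = underwrite(
    {"annual_income": 90_000, "annual_repayment": 20_000, "avg_monthly_inflow": 7_400}
)
```

The `audit` list is the start of the explainable decision package: each entry names the step and its outcome, so a reviewer can see which check drove the routing.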

Real-world examples:

In trading, milliseconds matter. AI agents can bridge the gap where human oversight falls short by scanning markets in real time, using reinforcement learning and news sentiment to inform trading and hedging decisions.

These agents function as market-aware, goal-driven financial advisors that work 24/7. By analyzing historical data and macro events, they can dynamically rebalance portfolios—shifting to defensive assets during volatility—to maximize returns while protecting capital.

Example: When a specific sector becomes overexposed due to price spikes, the agent detects the risk and immediately rebalances the portfolio to restore its intended asset allocation.
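The arithmetic behind this kind of rebalance is straightforward, as the sketch below shows. The asset names, target weights, and 5% drift tolerance are hypothetical, and the function only proposes trades; whether they execute automatically or wait for approval is a policy decision outside this snippet.

```python
def rebalance_trades(holdings: dict, targets: dict, drift_tolerance: float = 0.05) -> dict:
    """Return the amount to buy (+) or sell (-) per asset to restore target weights.

    holdings: asset -> current market value
    targets:  asset -> target weight (weights sum to 1)
    """
    total = sum(holdings.values())
    drifted = any(
        abs(holdings.get(asset, 0.0) / total - weight) > drift_tolerance
        for asset, weight in targets.items()
    )
    if not drifted:
        return {}  # inside tolerance: no trades proposed
    return {
        asset: round(weight * total - holdings.get(asset, 0.0), 2)
        for asset, weight in targets.items()
    }


holdings = {"tech": 70_000.0, "bonds": 20_000.0, "cash": 10_000.0}
targets = {"tech": 0.50, "bonds": 0.35, "cash": 0.15}
trades = rebalance_trades(holdings, targets)
```

Here the tech position has drifted from a 50% target to 70% of the portfolio, so the proposal sells 20,000 of tech and buys 15,000 of bonds and 5,000 of cash.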

Real-world examples:

It’s not sci-fi: agents can turn volatile markets into optimized opportunities, but this use case is typically adopted later, after controls and surveillance are mature.

Compliance is often the most resource-heavy function in finance: a constant race against shifting rules, where a single missed update can mean hefty fines. AI agents change this dynamic. Think of them as an always-on regulatory radar that doesn’t just beep when there’s trouble, but actually prepares the solution for you.

Instead of teams manually hunting through circulars or Basel norms, these agents continuously monitor regulator feeds. But they go further than simple alerts: they map these new obligations directly to your internal policies, identifying exactly what needs to change.

Here’s how it plays out: A new anti-money laundering rule is announced overnight. By 9 AM, the compliance team logs in to find a full breakdown from their AI agent: which internal policies are now outdated, which customers are affected, and a draft policy update awaiting their review. It turns a potential crisis into a manageable morning task.

Real-world examples:

It’s compliance as a superpower: always on, consistent, and audit-ready.

In banking, data is king. But what happens when your royal assets are trapped in a chaotic kingdom of PDFs, scans, and even handwritten notes? From bank statements to invoices and contracts, this “dark data” is a goldmine of insights, if only you could access it.

Enter AI agents, your specialized team for taming this paper tiger. These aren’t your average bots. By combining OCR with advanced AI, they read and interpret complex financial documents in real time.

Here’s how agents revolutionize the game:

By deploying AI agents, financial institutions are finally unlocking the value hidden in their documents. They are slashing manual processing time, reducing errors, and empowering their teams with clean, actionable data for faster, smarter decisions.

Real-world examples:

These use cases highlight AI agents as the connective tissue of modern finance—scalable, intelligent, and indispensable. From fraud sentinels to doc whisperers, they’re not replacing humans but amplifying us, driving a sector that’s more resilient and client-centric.

While the promise of agentic AI in finance is immense, the path to adoption is not without its hurdles. These challenges aren’t just technical; they’re also conceptual and architectural. On one hand, we’re grappling with how to grant these agents the autonomy to be effective without introducing unchecked risks. On the other, there’s the very real architectural headache of integrating these advanced systems with the legacy infrastructure that so much of the banking world still runs on.

Here’s a look at some of the key challenges and the solutions that are emerging:

A significant concern with AI agents is their potential to “hallucinate” or make errors in reasoning. To counter this, a multi-pronged approach is necessary. This includes tool verification to ensure the agent’s outputs are grounded in reality and implementing a “human-in-the-loop” system for critical decisions.
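Tool verification can be as simple as recomputing a number the model states and rejecting it if the two disagree. The sketch below checks a claimed European call price against the standard Black-Scholes formula, using only the standard library; the 1% tolerance and the `verify_claim` helper are assumptions for illustration.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def black_scholes_call(spot: float, strike: float, rate: float, vol: float, years: float) -> float:
    """Black-Scholes price of a European call on a non-dividend-paying asset."""
    d1 = (math.log(spot / strike) + (rate + 0.5 * vol**2) * years) / (vol * math.sqrt(years))
    d2 = d1 - vol * math.sqrt(years)
    return spot * norm_cdf(d1) - strike * math.exp(-rate * years) * norm_cdf(d2)


def verify_claim(claimed: float, computed: float, rel_tol: float = 0.01) -> bool:
    """Accept the model's number only if it matches the tool within tolerance."""
    return math.isclose(claimed, computed, rel_tol=rel_tol)


price = black_scholes_call(spot=100.0, strike=100.0, rate=0.05, vol=0.20, years=1.0)
accepted = verify_claim(claimed=10.45, computed=price)   # close to the tool's answer
rejected = verify_claim(claimed=12.00, computed=price)   # too far off, send back or escalate
```

With these inputs the tool returns roughly 10.45, so the first claim passes and the second fails. A failed check is a natural trigger for a retry or a human review.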

After all, agentic AI is still not fully autonomous. For high-stakes actions, like filing a regulatory report, human review and approval remain essential. McKinsey emphasizes that robust oversight is crucial in these early stages. The use of custom-built or fine-tuned models can also improve reliability by tailoring the AI to specific, well-understood tasks.

Root-cause diagnostics: When an agent produces a sub-optimal result, the challenge is identifying where the chain broke. W&B Weave allows developers to “double-click” into the ReAct loop to determine whether the error stemmed from a poor prompt, a malformed tool call, or a “hallucinated” interpretation of valid data.

The computational demands of sophisticated AI agents can be substantial, leading to concerns about scalability and latency. To mitigate this, solutions such as edge LLMs (running models closer to where the data is generated), caching frequently accessed data, and asynchronous processing are being explored.
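Two of these mitigations, caching and asynchronous processing, can be combined in a few lines. In the sketch below, `fx_rate` stands in for a slow reference-data lookup and the rates are placeholder values, not market data; `asyncio.sleep` simulates waiting on a model or API.

```python
import asyncio
from functools import lru_cache

CALLS = {"fx": 0}  # counts how often the slow lookup actually runs


@lru_cache(maxsize=256)
def fx_rate(pair: str) -> float:
    """Stand-in for a slow lookup; results are cached after the first call."""
    CALLS["fx"] += 1
    return {"EURUSD": 1.10, "GBPUSD": 1.25}[pair]  # placeholder values


async def score_transaction(txn_id: int, pair: str) -> tuple:
    await asyncio.sleep(0.01)  # simulated I/O wait
    return txn_id, fx_rate(pair)


async def score_batch(txns: list) -> list:
    """Score all transactions concurrently instead of one after another."""
    return await asyncio.gather(*(score_transaction(i, p) for i, p in txns))


results = asyncio.run(score_batch([(1, "EURUSD"), (2, "EURUSD"), (3, "GBPUSD")]))
```

The three transactions wait concurrently, and the repeated `EURUSD` lookup is served from the cache, so the slow function runs only twice.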

However, true scalability is about more than just computational power. It also involves deep integration with a bank’s internal policies, control frameworks, and existing systems. This is a non-trivial task that requires significant change management and close collaboration between compliance, legal, risk, and technology teams. The long sales cycles for this kind of technology can also create uncertainty around the return on investment. To address this, many firms are opting for phased pilots and hybrid models where humans and AI work in tandem.

In finance, data is both the biggest asset and the biggest liability. Handling sensitive financial information requires robust privacy and security measures. Techniques like federated learning (training models without centralizing sensitive data) and differential privacy are becoming increasingly important.
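As a small illustration of differential privacy, the sketch below releases a count with Laplace noise, the classic mechanism for numeric queries. The `epsilon` value and the sample data are arbitrary choices for the example, and the seeded standard-library generator is for demonstration only; production systems should use a vetted DP library.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(flags, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy.

    Adding or removing one record changes a count by at most 1 (sensitivity 1),
    so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for flag in flags if flag)
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)  # seeded only to make the example repeatable
flagged_accounts = [True, False, True, True, False]
noisy = private_count(flagged_accounts, epsilon=0.5, rng=rng)
```

A smaller `epsilon` means more noise and stronger privacy; the released value is unbiased, so averages over many queries still track the true count.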

Beyond security, the effectiveness of any AI, especially in machine learning-based tasks like transaction monitoring and anomaly detection, hinges on the quality of the data it’s trained on. Poor data quality will inevitably limit the AI’s effectiveness.

Navigating the evolving regulatory landscape is another major challenge. The use of AI in compliance doesn’t eliminate regulatory risk; it creates new ones, such as model risk and the need for explainability. Regulators are increasingly focused on understanding how these AI systems arrive at their decisions.

This is where governance frameworks, detailed audit trails, and sandbox testing come into play. Even if an AI agent flags a suspicious transaction or drafts a report, the “audit package”—the evidence, rationale, and decision-making trail—must be solid enough for both internal compliance teams and external regulators to trust it. Building this trust is paramount for the successful adoption of agentic AI in the financial sector.

As we stand on the brink of a new era in finance, where AI agents are becoming increasingly autonomous, we must navigate the significant ethical and regulatory landscape with care. The power of autonomous decision-making in the financial sector brings with it a responsibility to build robust systems of governance, transparency, and accountability. This is not just about avoiding negative outcomes like discrimination or a loss of customer trust; it’s about proactively building a financial future that is fair and equitable for everyone.

To truly harness the power of AI for good, financial institutions must embed ethical considerations into the very architecture of their AI systems. This means prioritizing fairness, accountability, and transparency (the FAccT principles), along with controllability, at every stage of development and deployment. Let’s break down what this looks like in practice.

At the heart of responsible AI is a strong ethical governance framework. This isn’t just a compliance exercise; it’s a commitment to defining clear policies for how data is handled, how user consent is obtained, and how transparent we are in our algorithmic decision-making. We’re seeing leading institutions establish dedicated AI ethics committees and appoint AI governance officers to champion these principles. Such frameworks are essential for aligning with emerging regulations, such as the EU AI Act, and for building a culture of responsibility.

It’s a well-known fact that AI algorithms trained on historical data can inadvertently learn and even amplify existing societal biases. This is a significant risk in areas like lending, where biased algorithms could perpetuate discriminatory practices. To combat this, we must be relentless in our pursuit of fairness. This involves conducting rigorous audits of our training data to identify and mitigate potential biases. By using diverse datasets and regularly testing our models against fairness metrics, such as demographic parity, we can work towards more equitable outcomes.
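Demographic parity compares approval rates across groups, which makes it easy to compute. The sketch below uses made-up group labels and decisions; what counts as an acceptable gap is a policy question the code does not answer.

```python
def approval_rates(decisions) -> dict:
    """decisions: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions) -> float:
    """Largest difference in approval rate between any two groups (0 means parity)."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Synthetic data: group A approved 8 of 10, group B approved 5 of 10
sample = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
rates = approval_rates(sample)
gap = demographic_parity_gap(sample)
```

Tracking this gap per model version turns the fairness audit into a regression test rather than a one-off review.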

The “black box” problem, where the inner workings of an AI model are opaque, is a major hurdle in finance, a sector that rightly demands clear explanations for its decisions. This is where explainable AI (XAI) becomes a game-changer.

By using techniques that trace the logic behind an AI’s conclusions, such as SHAP or controlled LLM traces, we can provide clear, natural-language explanations for even the most complex decisions. This transparency is crucial for building trust with both customers and regulators.

LLM traces as transparency: While traditional XAI focuses on feature weights, agentic transparency requires a “glass box” view of the decision process. By maintaining a centralized library of Weave Traces, institutions can provide regulators with a step-by-step, natural-language rationale for any autonomous action.

While AI can automate and optimize many processes, we must never lose sight of human accountability. Clear lines of responsibility must be established for the actions of any AI agent. A “human-in-the-loop” approach ensures that AI serves as a powerful tool to aid human decision-making, not replace it entirely. This means that for critical judgments, the ultimate responsibility remains with a human expert. Furthermore, implementing “kill-switches” or containment measures is a practical step to ensure that we can intervene if an AI system behaves unexpectedly.
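A human-in-the-loop gate with a kill switch can be sketched in a few lines. The action names, status strings, and the `ActionGate` class below are hypothetical; the idea is that high-stakes actions queue for approval and that one flag halts everything.

```python
from dataclasses import dataclass, field

# Hypothetical set of actions that always require human sign-off
HIGH_STAKES = {"file_regulatory_report", "execute_trade"}


@dataclass
class ActionGate:
    kill_switch: bool = False
    pending: list = field(default_factory=list)
    executed: list = field(default_factory=list)

    def submit(self, action: str, payload: dict) -> str:
        if self.kill_switch:
            return "halted"
        if action in HIGH_STAKES:
            self.pending.append((action, payload))  # wait for a human
            return "awaiting_approval"
        self.executed.append((action, payload))
        return "executed"

    def approve(self, index: int, approver: str) -> str:
        if self.kill_switch:
            return "halted"
        action, payload = self.pending.pop(index)
        # Record who approved, so accountability stays with a named person
        self.executed.append((action, {**payload, "approved_by": approver}))
        return "executed"


gate = ActionGate()
low = gate.submit("draft_summary", {"case": 17})
high = gate.submit("file_regulatory_report", {"case": 17})
approved = gate.approve(0, approver="compliance_officer")
gate.kill_switch = True
after_kill = gate.submit("draft_summary", {"case": 18})
```

Storing the approver alongside the executed action keeps the line of responsibility explicit in the record itself.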

In the digital age, data is a significant liability if not handled with the utmost care. The foundation of any responsible AI strategy must be robust data governance and security. This involves creating and enforcing clear frameworks for data quality, consistency, and access control. Complying with regulations like GDPR and SOX isn’t just about ticking a box; it’s about fundamentally respecting customer privacy through practices like data minimization and encryption.

In agentic systems, ethics extend to autonomous actions, requiring frameworks like SymphonyAI’s principles.

We’re no longer talking about chatbots or predictive analytics. Agentic AI in finance has crossed the threshold from “helpful assistant” to operational decision support with auditable recommendations—and selective automation where policy allows.

In 2023 we built models.

In 2024 we wrapped them in APIs.

In 2025 we gave them agency.

And now, in 2026, the work is making that agency safe, observable, and governable.

The roadmap to this future is already taking shape. Between 2026 and 2027, expect to see widespread pilot programs, particularly in critical areas like compliance and fraud detection. By 2028, these pilots will mature into deeply integrated financial workflows where agents operate as “digital coworkers” under policy-driven controls, with humans firmly in the loop for material decisions.

This trajectory points to a future in which AI agents evolve from supportive tools to core infrastructure, enabling them to reduce cycle time, increase coverage, and strengthen auditability when implemented responsibly. As the World Economic Forum notes, “agentic AI promises to enhance productivity, precision and decision-making, driving financial services towards deeper process autonomy.”

By 2030, AI agent markets are projected to grow significantly, but the real story isn’t just market size; it’s the operational shift toward systems that can execute end-to-end workflows with governance built in. Several key trends will define this new era:

Looking ahead, the integration of agentic AI, real-time payments, and blockchain represents a seismic shift in the banking landscape. These technologies are not just supplementary tools; they are reshaping the core operations of financial institutions. The financial world is on the brink of a new era marked by greater efficiency, innovation, and customer-centric services.

The real story of 2030 won’t be how many agents we built, but how we managed them. Infrastructure like W&B Weave will serve as the “control tower” for these digital coworkers, ensuring that as agent autonomy grows, our ability to monitor, evaluate, and govern it scales in tandem.