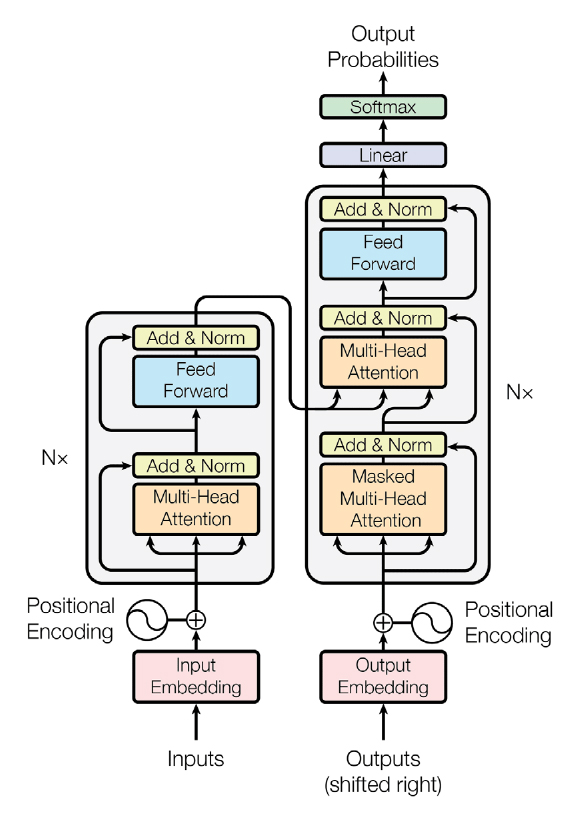

Modern LLMs are based on the transformer architecture. The main architectural unit is a transformer block, which consists of (at a minimum) multi-headed self-attention, layer normalization, a dense two-layer feedforward network, and residual connections. A transformer stack is a sequence of such blocks.
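The following minimal NumPy sketch shows a single transformer block and a two-block stack. It makes several assumptions. It uses the post-LN ordering of the original transformer (`LayerNorm(x + Sublayer(x))`), a ReLU feedforward layer, and layer normalization without a learned gain or bias. Random weights stand in for trained ones. The function names and the `init_params` helper are illustrative and do not come from any library.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    # Normalize each token vector to zero mean and unit variance.
    # (Assumption: no learned gain/bias, to keep the sketch short.)
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(x, p, n_heads, mask=None):
    seq_len, d_model = x.shape
    d_head = d_model // n_heads

    def split_heads(t):
        # (seq_len, d_model) -> (n_heads, seq_len, d_head)
        return t.reshape(seq_len, n_heads, d_head).transpose(1, 0, 2)

    q = split_heads(x @ p["w_q"])
    k = split_heads(x @ p["w_k"])
    v = split_heads(x @ p["w_v"])
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (n_heads, seq_len, seq_len)
    if mask is not None:
        # mask[i, j] is True where query position i may attend to key position j.
        scores = np.where(mask, scores, -1e9)
    heads = softmax(scores) @ v  # (n_heads, seq_len, d_head)
    # Concatenate the heads and apply the output projection.
    return heads.transpose(1, 0, 2).reshape(seq_len, d_model) @ p["w_o"]


def feed_forward(x, p):
    # Dense two-layer network with a ReLU in between, applied at each position.
    return np.maximum(0, x @ p["w1"] + p["b1"]) @ p["w2"] + p["b2"]


def transformer_block(x, p, n_heads, mask=None):
    # Post-LN ordering: each sublayer is wrapped as LayerNorm(x + Sublayer(x)).
    x = layer_norm(x + multi_head_self_attention(x, p, n_heads, mask))
    return layer_norm(x + feed_forward(x, p))


def init_params(d_model, d_ff, rng, scale=0.02):
    # Hypothetical helper: random weights stand in for trained parameters.
    shapes = {
        "w_q": (d_model, d_model), "w_k": (d_model, d_model),
        "w_v": (d_model, d_model), "w_o": (d_model, d_model),
        "w1": (d_model, d_ff), "b1": (d_ff,),
        "w2": (d_ff, d_model), "b2": (d_model,),
    }
    return {name: rng.normal(0.0, scale, shape) for name, shape in shapes.items()}


rng = np.random.default_rng(0)
d_model, d_ff, n_heads, seq_len = 16, 64, 4, 5
x = rng.normal(size=(seq_len, d_model))  # stand-in for embedded input tokens

# A transformer stack is just a sequence of blocks applied one after another.
stack = [init_params(d_model, d_ff, rng) for _ in range(2)]
h = x
for p in stack:
    h = transformer_block(h, p, n_heads)
print(h.shape)  # (5, 16)
```

Passing a boolean `mask` lets the same block serve as a decoder block. Moving the normalization to the input of each sublayer (`x + Sublayer(LayerNorm(x))`) gives the pre-LN variant that many later models, such as GPT-2, use instead.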

The diagram below shows a typical transformer architecture with an encoder-decoder structure:

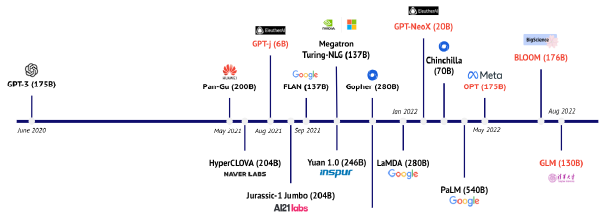

Since the advent of transformers, many architectural variants have been proposed. They differ in architecture (e.g., decoder-only vs. encoder-decoder models), in pre-training objective (e.g., full language modeling, prefix language modeling, masked language modeling), and in other respects.

The original transformer paired an encoder that processes the input text with a decoder that generates the target text (an encoder-decoder model). In contrast, many of the most popular LLMs, such as GPT-3, OPT, PaLM, and GPT-NeoX, are causal decoder-only models trained to autoregressively predict a text sequence.
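The autoregressive setup can be sketched in plain Python. During training, the targets are the inputs shifted left by one position. At inference time, the model is called repeatedly, each time on the tokens produced so far. The `toy_next_token` lookup table below is a hypothetical stand-in for a trained model, and greedy decoding is only one of several possible decoding strategies.

```python
from typing import Callable, Dict, List, Tuple


def shift_for_next_token(tokens: List[int]) -> Tuple[List[int], List[int]]:
    # The input at position t is tokens[t]; the training target is tokens[t + 1].
    return tokens[:-1], tokens[1:]


def greedy_generate(
    next_token: Callable[[List[int]], int],
    prompt: List[int],
    max_new_tokens: int,
    eos_id: int,
) -> List[int]:
    out = list(prompt)
    for _ in range(max_new_tokens):
        tok = next_token(out)  # the model only ever sees tokens to its left
        out.append(tok)
        if tok == eos_id:
            break
    return out


# Hypothetical stand-in for a trained model: a bigram lookup table (token 0 = end of sequence).
BIGRAMS: Dict[int, int] = {1: 2, 2: 3, 3: 0}


def toy_next_token(context: List[int]) -> int:
    return BIGRAMS.get(context[-1], 0)


print(shift_for_next_token([5, 8, 13, 21]))  # ([5, 8, 13], [8, 13, 21])
print(greedy_generate(toy_next_token, [1], max_new_tokens=10, eos_id=0))  # [1, 2, 3, 0]
```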

In contrast with the trend toward decoder-only models, some research shows that encoder-decoder models outperform decoder-only LLMs in transfer learning (i.e., when a pre-trained model is fine-tuned on a single downstream task). For a detailed comparison of architecture types, see *What Language Model Architecture and Pre-training Objective Work Best for Zero-Shot Generalization?*

Here are a few of the most popular pre-training architectures:

Encoder-decoder models: As originally proposed, the transformer consists of two stacks: an encoder and a decoder. The encoder is fed the sequence of input tokens and outputs a sequence of vectors of the same length as the input. Then, the decoder autoregressively predicts the target sequence, token by token, conditioned on the output of the encoder. Representative models of this type include T5 and BART.
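In the original transformer, the decoder is conditioned on the encoder through cross-attention: the decoder's queries attend over the encoder's output vectors. The single-head NumPy sketch below shows only the shapes involved. Random arrays stand in for real encoder outputs and decoder states, and the weight names are illustrative assumptions.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(dec_states, enc_out, w_q, w_k, w_v):
    # Queries come from the decoder; keys and values come from the encoder output.
    q = dec_states @ w_q  # (tgt_len, d)
    k = enc_out @ w_k     # (src_len, d)
    v = enc_out @ w_v     # (src_len, d)
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))  # (tgt_len, src_len)
    return weights @ v, weights


rng = np.random.default_rng(0)
d_model, src_len, tgt_len = 8, 6, 3
# Stand-in for the encoder output: one vector per input token (same length as the input).
enc_out = rng.normal(size=(src_len, d_model))
# Stand-in for decoder states for the target tokens generated so far.
dec_states = rng.normal(size=(tgt_len, d_model))
w_q, w_k, w_v = (rng.normal(0.0, 0.1, (d_model, d_model)) for _ in range(3))

context, weights = cross_attention(dec_states, enc_out, w_q, w_k, w_v)
print(context.shape, weights.shape)  # (3, 8) (3, 6)
```

Each row of `weights` is a distribution over the input positions, so every target position can draw on the whole encoded input.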

Causal decoder-only models: These are decoder-only models trained to autoregressively predict a text sequence. “Causal” means that each position can attend only to its left context (the preceding tokens), which is what next-token prediction requires. Representative examples of this type include GPT-3, GPT-J, GPT-NeoX, and OPT.
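In practice, the left-context restriction is enforced with a lower-triangular attention mask. Here is a minimal sketch in plain Python. It assumes the convention that `True` means “query position i may attend to key position j”.

```python
from typing import List


def causal_mask(n: int) -> List[List[bool]]:
    # Query position i may attend to key position j only if j <= i.
    return [[j <= i for j in range(n)] for i in range(n)]


for row in causal_mask(4):
    print("".join("1" if allowed else "0" for allowed in row))
# 1000
# 1100
# 1110
# 1111
```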

Non-causal decoder-only models: To let decoder-only models build richer, non-causal representations of the input text, the attention mask is modified so that the part of the sequence carrying the conditioning information (the prefix) uses a non-causal mask (i.e., attention there is not restricted to past tokens). Such models are also called prefix language models (PLMs); representative examples include UniLM (versions 1 and 2) and ERNIE-M.
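A prefix mask differs from a causal mask only in the conditioning region. The sketch below is in plain Python and uses the `True` = “may attend” convention. The `prefix_len` parameter is a hypothetical name for the length of the conditioning text. Every position may see the whole prefix, while positions after the prefix remain causal.

```python
from typing import List


def prefix_lm_mask(n: int, prefix_len: int) -> List[List[bool]]:
    # Keys inside the prefix are visible to every query (bidirectional over the prefix);
    # keys after the prefix follow the causal rule j <= i.
    return [[j < prefix_len or j <= i for j in range(n)] for i in range(n)]


for row in prefix_lm_mask(5, prefix_len=3):
    print("".join("1" if allowed else "0" for allowed in row))
# 11100
# 11100
# 11100
# 11110
# 11111
```

With `prefix_len=0`, the function reduces to an ordinary causal mask. With `prefix_len=n`, it becomes fully bidirectional.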

Masked language models: These are typically encoder-only models pre-trained with a masked language modeling (MLM) objective, in which the model predicts masked-out pieces of text from the surrounding context on both sides. Representative MLMs include BERT and ERNIE.
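The MLM corruption step can be sketched as follows. The sketch follows the recipe described in the BERT paper: select about 15% of tokens, then replace 80% of those with a mask token, replace 10% with a random token, and leave 10% unchanged. It simplifies that recipe by selecting each token independently rather than sampling an exact count. The `mask_id` and `vocab_size` values are hypothetical.

```python
import random
from typing import List, Optional, Tuple


def mask_tokens(
    tokens: List[int],
    mask_id: int,
    vocab_size: int,
    rng: random.Random,
    mask_prob: float = 0.15,
) -> Tuple[List[int], List[Optional[int]]]:
    inputs = list(tokens)
    # labels[i] is the original token where the model must make a prediction, else None.
    labels: List[Optional[int]] = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected: no prediction required
        labels[i] = tok
        r = rng.random()
        if r < 0.8:
            inputs[i] = mask_id  # 80%: replace with the mask token
        elif r < 0.9:
            inputs[i] = rng.randrange(vocab_size)  # 10%: replace with a random token
        # remaining 10%: keep the original token
    return inputs, labels


rng = random.Random(0)
corrupted, labels = mask_tokens([12, 7, 42, 99, 3, 18], mask_id=0, vocab_size=100, rng=rng)
```

The loss is then computed only at positions where `labels` is not `None`. Because no causal mask is applied, the encoder can use context on both sides of each masked position.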